Image features¶

In this chapter we will describe a set of quantitative image features together with the reference values established by the IBSI. This feature set builds upon the feature sets proposed by [Aerts2014] and [Hatt2016], which are themselves largely derived from earlier works. References to earlier work are provided whenever they could be identified.

Reference values were derived for each feature. A table of reference values contains the values that could be reliably reproduced, within a tolerance margin, for the reference data sets (see Reference data sets). Consensus on the validity of each reference value is also noted. Consensus can have four levels, depending on the number of teams that were able to produce the same value during the standardization process: weak (\(<3\) matches), moderate (\(3\) to \(5\) matches), strong (\(6\) to \(9\) matches), and very strong (\(\geq 10\) matches). If consensus on a reference value was weak or if it could not be reproduced by an absolute majority of teams, it was not considered standardized. Such features do currently not have reference values, and should not be used.

The set of features can be divided into a number of families, of which intensity-based statistical, intensity histogram-based, intensity-volume histogram-based, morphological features, local intensity, and texture matrix-based features are treated here. All texture matrices are rotationally and translationally invariant. Illumination invariance of texture matrices may be achieved by particular image post-acquisition schemes, e.g. histogram matching. None of the texture matrices are scale invariant, a property which can be useful in many (biomedical) applications. What the presented texture matrices lack, however, is directionality in combination with rotation invariance. These may be achieved by local binary patterns and steerable filters, which however fall beyond the scope of the current work. For these and other texture features, see [Depeursinge2014].

Features are calculated on the base image, as well as images transformed using wavelet or Gabor filters). To calculate features, it is assumed that an image segmentation mask exists, which identifies the voxels located within a region of interest (ROI). The ROI itself consists of two masks, an intensity mask and a morphological mask. These masks may be identical, but not necessarily so, as described in the section on Re-segmentation.

Several feature families require additional image processing steps before feature calculation. Notably intensity histogram and texture feature families require prior discretisation of intensities into grey level bins. Other feature families do not require discretisation before calculations. For more details on image processing, see Fig. 1 in the previous chapter.

Below is an overview table that summarises image processing requirements for the different feature families.

| ROI mask | ||||

|---|---|---|---|---|

| Feature family | count | morph. | int. | discr. |

| morphology | 29 | ✔ | ✔ | ✕ |

| local intensity | 2 | ✕ | ✔ a | ✕ |

| intensity-based statistics | 18 | ✕ | ✔ | ✕ |

| intensity histogram | 23 | ✕ | ✔ | ✔ |

| intensity-volume histogram | 5 | ✕ | ✔ | ✔ b |

| grey level co-occurrence matrix | 25 | ✕ | ✔ | ✔ |

| grey level run length matrix | 16 | ✕ | ✔ | ✔ |

| grey level size zone matrix | 16 | ✕ | ✔ | ✔ |

| grey level distance zone matrix | 16 | ✔ | ✔ | ✔ |

| neighbourhood grey tone difference matrix | 5 | ✕ | ✔ | ✔ |

| neighbouring grey level dependence matrix | 17 | ✕ | ✔ | ✔ |

Though image processing parameters affect feature values, three other concepts influence feature values for many features: distance, feature aggregation and distance weighting. These are described below.

Grid distances¶

MPUJ

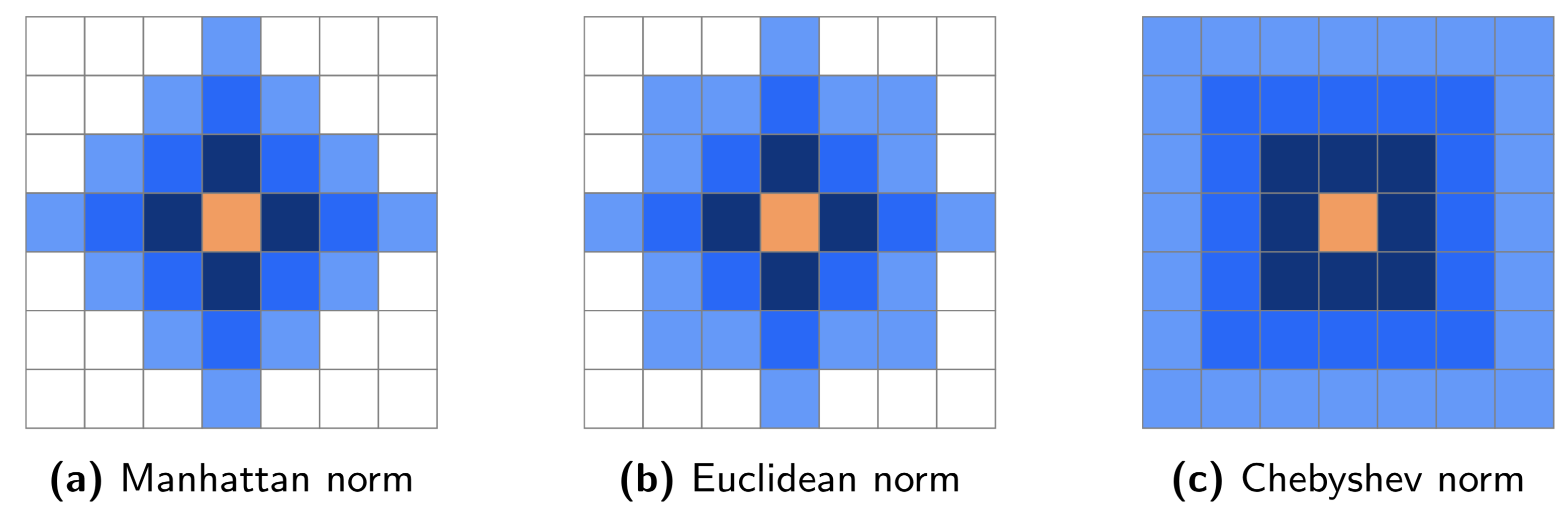

Grid distance is an important concept that is used by several feature families, particularly texture features. Grid distances can be measured in several ways. Let \(\mathbf{m}=\left(m_x,m_y,m_z\right)\) be the vector from a center voxel at \(\mathbf{k}=\left(k_x,k_y,k_z\right)\) to a neighbour voxel at \(\mathbf{k}+\mathbf{m}\). The following norms (distances) are used:

\(\ell_1\) norm or Manhattan norm (LIFZ):

\[\|\mathbf{m}\|_1 = |m_x| + |m_y| + |m_z|\]\(\ell_2\) norm or Euclidean norm (G9EV):

\[\|\mathbf{m}\|_2 = \sqrt{m_x^2 + m_y^2 + m_z^2}\]\(\ell_{\infty}\) norm or Chebyshev norm (PVMT):

\[\|\mathbf{m}\|_{\infty} = \text{max}(|m_x|,|m_y|,|m_z|)\]

An example of how the above norms differ in practice is shown in Fig. 8 .

Fig. 8 Grid neighbourhoods for distances up to \(3\) according to Manhattan, Euclidean and Chebyshev norms. The orange pixel is considered the center pixel. Dark blue pixels have distance \(\delta=1\), blue pixels \(\delta\leq2\) and light blue pixels \(\delta\leq3\) for the corresponding norm.

Feature aggregation¶

5QB6

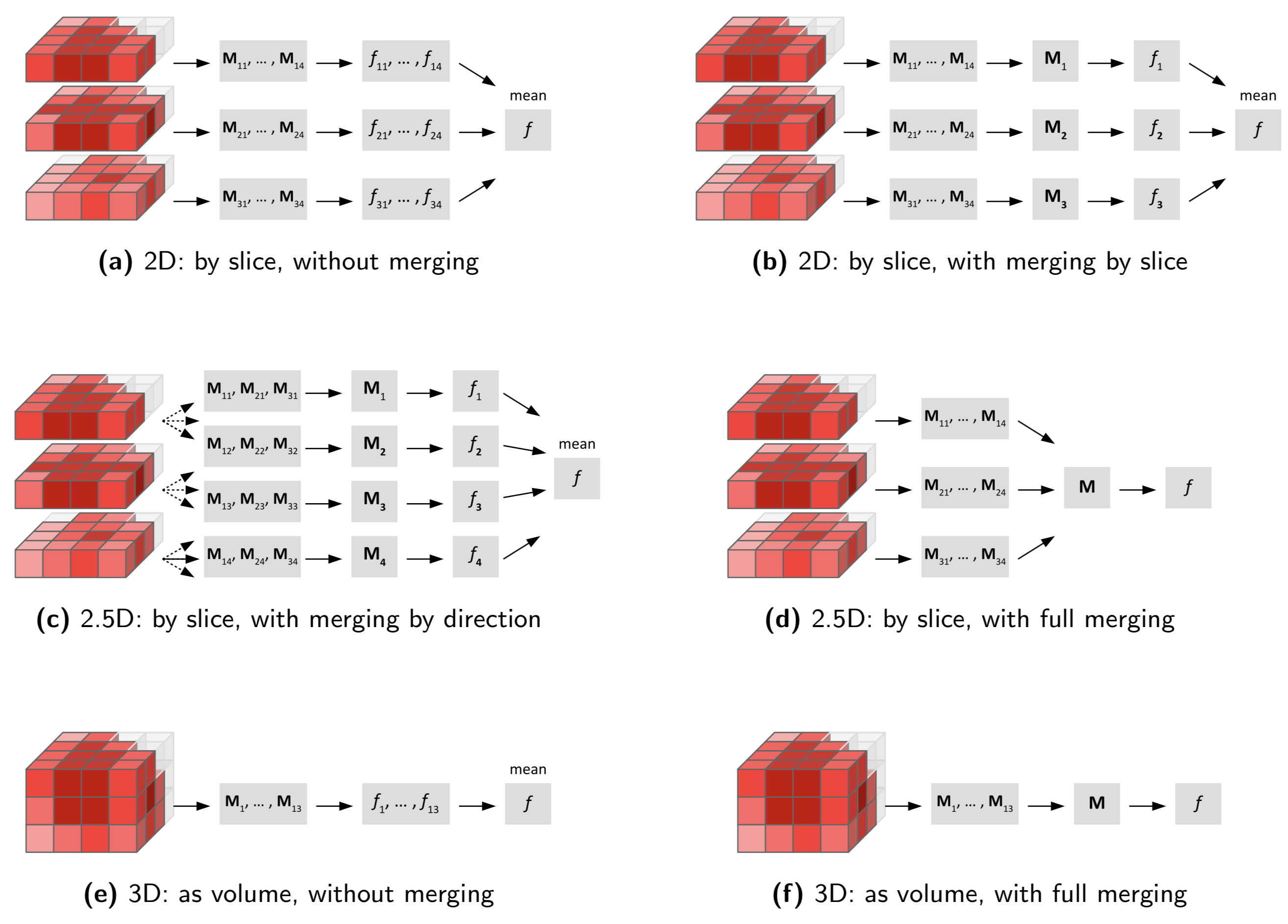

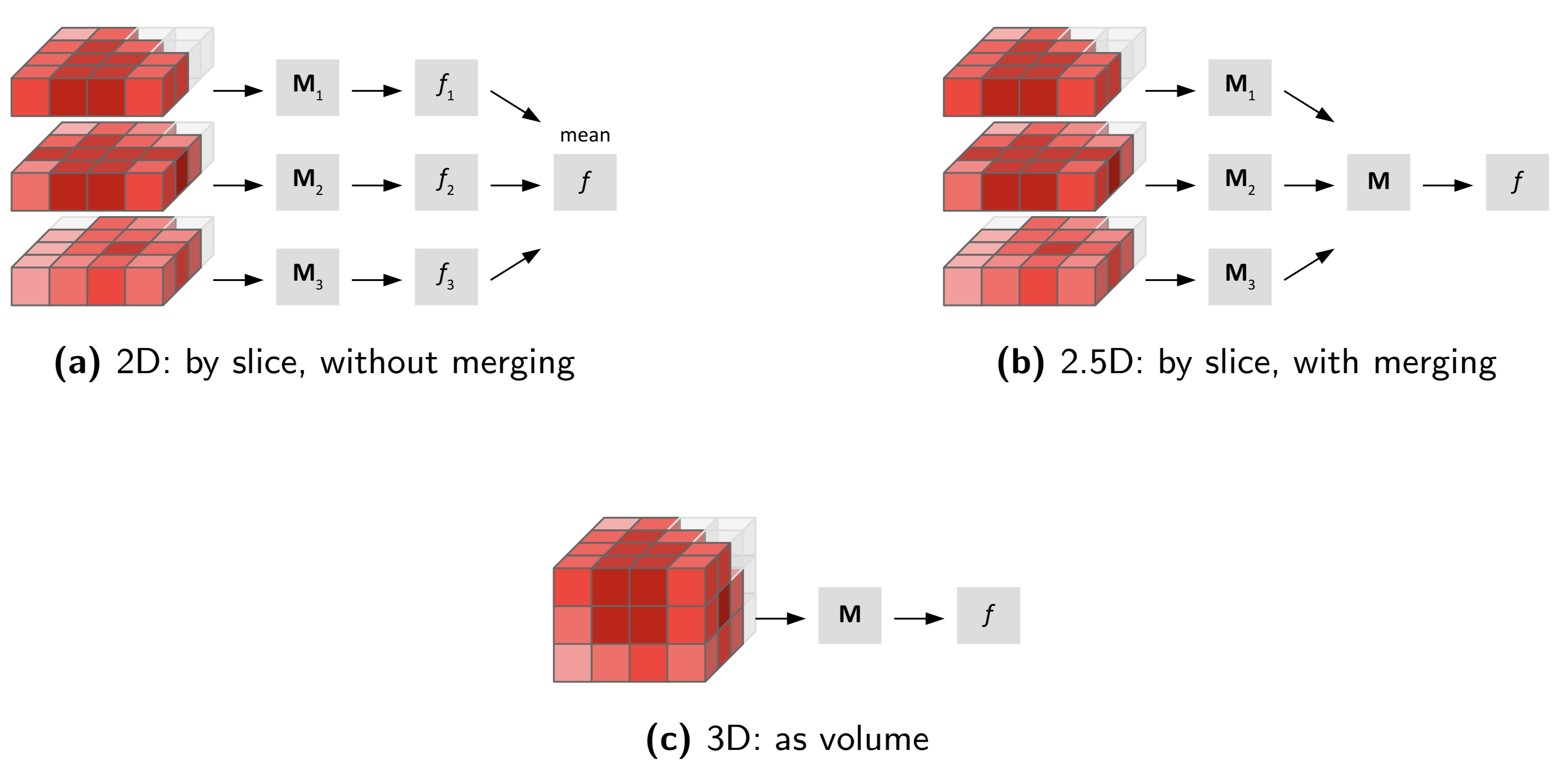

Features from some families may be calculated from, e.g. slices. As a consequence, multiple values for the same feature may be computed. These different values should be combined into a single value for many common purposes. This process is referred to as feature aggregation. Feature aggregation methods depend on the family, and are detailed in the family description.

Distance weighting¶

6CK8

Distance weighting is not a default operation for any of the texture families, but is implemented in software such as PyRadiomics [VanGriethuysen2017]. It may for example be used to put more emphasis on local intensities.

Morphological features¶

HCUG

Morphological features describe geometric aspects of a region of interest (ROI), such as area and volume. Morphological features are based on ROI voxel representations of the volume. Three voxel representations of the volume are conceivable:

- The volume is represented by a collection of voxels with each voxel taking up a certain volume (LQD8).

- The volume is represented by a voxel point set \(\mathbf{X}_{c}\) that consists of coordinates of the voxel centers (4KW8).

- The volume is represented by a surface mesh (WRJH).

We use the second representation when the inner structure of the volume is important, and the third representation when only the outer surface structure is important. The first representation is not used outside volume approximations because it does not handle partial volume effects at the ROI edge well, and also to avoid inconsistencies in feature values introduced by mixing representations in small voxel volumes.

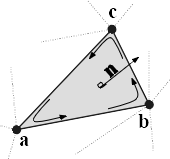

Mesh-based representation¶

A mesh-based representation of the outer surface allows consistent evaluation of the surface volume and area independent of size. Voxel-based representations lead to partial volume effects and over-estimation of the surface area. The surface of the ROI volume is translated into a triangle mesh using a meshing algorithm. While multiple meshing algorithms exist, we suggest the use of the Marching Cubes algorithm [Lorensen1987][Lewiner2003] because of its widespread availability in different programming languages and reasonable approximation of the surface area and volume [Stelldinger2007]. In practice, mesh-based feature values depend upon the meshing algorithm and small differences may occur between implementations [Limkin2019jt].

Fig. 9 Meshing algorithms draw faces and vertices to cover the ROI. One face, spanned by vertices \(\mathbf{a}\), \(\mathbf{b}\) and \(\mathbf{c}\), is highlighted. Moreover, the vertices define the three edges \(\mathbf{ab}=\mathbf{b}-\mathbf{a}\), \(\mathbf{bc}=\mathbf{c}-\mathbf{b}\) and \(\mathbf{ca}=\mathbf{a}-\mathbf{c}\). The face normal \(\mathbf{n}\) is determined using the right-hand rule, and calculated as \(\mathbf{n}=\left(\mathbf{ab} \times \mathbf{bc}\right) / \| \mathbf{ab} \times \mathbf{bc}\|\), i.e. the outer product of edge \(\mathbf{ab}\) with edge \(\mathbf{bc}\), normalised by its length.

Meshing algorithms use the ROI voxel point set \(\mathbf{X}_{c}\) to create a closed mesh. Dependent on the algorithm, a parameter is required to specify where the mesh should be drawn. A default level of 0.5 times the voxel spacing is used for marching cube algorithms. Other algorithms require a so-called isovalue, for which a value of 0.5 can be used since the ROI mask consists of \(0\) and \(1\) values, and we want to roughly draw the mesh half-way between voxel centers. Depending on implementation, algorithms may also require padding of the ROI mask with non-ROI (\(0\)) voxels to correctly estimate the mesh in places where ROI voxels would otherwise be located at the edge of the mask.

The closed mesh drawn by the meshing algorithm consists of \(N_{fc}\) triangle faces spanned by \(N_{vx}\) vertex points. An example triangle face is drawn in Fig. 9. The set of vertex points is then \(\mathbf{X}_{vx}\).

The calculation of the mesh volume requires that all faces have the same orientation of the face normal. Consistent orientation can be checked by the fact that in a regular, closed mesh, all edges are shared between exactly two faces. Given the edge spanned by vertices \(\mathbf{a}\) and \(\mathbf{b}\), the edge must be \(\mathbf{ab}=\mathbf{b}-\mathbf{a}\) for one face and \(\mathbf{ba}=\mathbf{a}-\mathbf{b}\) for the adjacent face. This ensures consistent application of the right-hand rule, and thus consistent orientation of the face normals. Algorithm implementations may return consistently orientated faces by default.

ROI morphological and intensity masks¶

The ROI consists of a morphological and an intensity mask. The morphological mask is used to calculate many of the morphological features and to generate the voxel point set \(\mathbf{X}_{c}\). Any holes within the morphological mask are understood to be the result of segmentation decisions, and thus to be intentional. The intensity mask is used to generate the voxel intensity set \(\mathbf{X}_{gl}\) with corresponding point set \(\mathbf{X}_{c,gl}\).

Aggregating features¶

By definition, morphological features are calculated in 3D (DHQ4), and not per slice.

Units of measurement¶

By definition, morphological features are computed using the unit of length as defined in the DICOM standard, i.e. millimeter for most medical imaging modalities.

If the unit of length is not defined by a standard, but is explicitly defined as meta data, this definition should be used. In this case, care should be taken that this definition is consistent across all data in the cohort.

If a feature value should be expressed as a different unit of length, e.g. cm instead of mm, such conversions should take place after computing the value using the standard units.

Volume (mesh)¶

RNU0

The mesh-based volume \(V\) is calculated from the ROI mesh as follows [Zhang2001]. A tetrahedron is formed by each face \(k\) and the origin. By placing the origin vertex of each tetrahedron at \((0,0,0)\), the signed volume of the tetrahedron is:

Here \(\mathbf{a}\), \(\mathbf{b}\) and \(\mathbf{c}\) are the vertex points of face \(k\). Depending on the orientation of the normal, the signed volume may be positive or negative. Hence, the orientation of face normals should be consistent, e.g. all normals must be either pointing outward or inward. The volume \(V\) is then calculated by summing over the face volumes, and taking the absolute value:

In positron emission tomography, the volume of the ROI commonly receives a name related to the radioactive tracer, e.g. metabolically active tumour volume (MATV) for 18F-FDG.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 556 | 4 | very strong |

| config. A | \(3.58 \times 10^{5}\) | \(5 \times 10^{3}\) | very strong |

| config. B | \(3.58 \times 10^{5}\) | \(5 \times 10^{3}\) | strong |

| config. C | \(3.67 \times 10^{5}\) | \(6 \times 10^{3}\) | strong |

| config. D | \(3.67 \times 10^{5}\) | \(6 \times 10^{3}\) | strong |

| config. E | \(3.67 \times 10^{5}\) | \(6 \times 10^{3}\) | strong |

Volume (voxel counting)¶

YEKZ

In clinical practice, volumes are commonly determined by counting voxels. For volumes consisting of a large number of voxels (1000s), the differences between voxel counting and mesh-based approaches are usually negligible. However for volumes with a low number of voxels (10s to 100s), voxel counting will overestimate volume compared to the mesh-based approach. It is therefore only used as a reference feature, and not in the calculation of other morphological features.

Voxel counting volume is defined as:

Here \(N_v\) is the number of voxels in the morphological mask of the ROI, and \(V_k\) the volume of voxel \(k\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 592 | 4 | very strong |

| config. A | \(3.59 \times 10^{5}\) | \(5 \times 10^{3}\) | strong |

| config. B | \(3.58 \times 10^{5}\) | \(5 \times 10^{3}\) | strong |

| config. C | \(3.68 \times 10^{5}\) | \(6 \times 10^{3}\) | strong |

| config. D | \(3.68 \times 10^{5}\) | \(6 \times 10^{3}\) | strong |

| config. E | \(3.68 \times 10^{5}\) | \(6 \times 10^{3}\) | strong |

Surface area (mesh)¶

C0JK

The surface area \(A\) is also calculated from the ROI mesh by summing over the triangular face surface areas [Aerts2014]. By definition, the area of face \(k\) is:

As in Fig. 9, edge \(\mathbf{ab}=\mathbf{b}-\mathbf{a}\) is the vector from vertex \(\mathbf{a}\) to vertex \(\mathbf{b}\), and edge \(\mathbf{ac}=\mathbf{c}-\mathbf{a}\) the vector from vertex \(\mathbf{a}\) to vertex \(\mathbf{c}\). The total surface area \(A\) is then:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 388 | 3 | very strong |

| config. A | \(3.57 \times 10^{4}\) | 300 | strong |

| config. B | \(3.37 \times 10^{4}\) | 300 | strong |

| config. C | \(3.43 \times 10^{4}\) | 400 | strong |

| config. D | \(3.43 \times 10^{4}\) | 400 | strong |

| config. E | \(3.43 \times 10^{4}\) | 400 | strong |

Surface to volume ratio¶

2PR5

The surface to volume ratio is given as [Aerts2014]:

Note that this feature is not dimensionless.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.698 | 0.004 | very strong |

| config. A | 0.0996 | 0.0005 | strong |

| config. B | 0.0944 | 0.0005 | strong |

| config. C | 0.0934 | 0.0007 | strong |

| config. D | 0.0934 | 0.0007 | strong |

| config. E | 0.0934 | 0.0007 | strong |

Compactness 1¶

SKGS

Several features (compactness 1 and 2, spherical disproportion, sphericity and asphericity) quantify the deviation of the ROI volume from a representative spheroid. All these definitions can be derived from one another. As a results these features are are highly correlated and may thus be redundant. Compactness 1 [Aerts2014] is a measure for how compact, or sphere-like the volume is. It is defined as:

Compactness 1 is sometimes [Aerts2014] defined using \(A^{2/3}\) instead of \(A^{3/2}\), but this does not lead to a dimensionless quantity.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.0411 | 0.0003 | strong |

| config. A | 0.03 | 0.0001 | strong |

| config. B | 0.0326 | 0.0001 | strong |

| config. C | — | — | moderate |

| config. D | 0.0326 | 0.0002 | strong |

| config. E | 0.0326 | 0.0002 | strong |

Compactness 2¶

BQWJ

Like Compactness 1, Compactness 2 [Aerts2014] quantifies how sphere-like the volume is:

By definition \(F_{\mathit{morph.comp.1}} = 1/6\pi \left(F_{\mathit{morph.comp.2}}\right)^{1/2}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.599 | 0.004 | strong |

| config. A | 0.319 | 0.001 | strong |

| config. B | 0.377 | 0.001 | strong |

| config. C | 0.378 | 0.004 | strong |

| config. D | 0.378 | 0.004 | strong |

| config. E | 0.378 | 0.004 | strong |

Spherical disproportion¶

KRCK

Spherical disproportion [Aerts2014] likewise describes how sphere-like the volume is:

By definition \(F_{\mathit{morph.sph.dispr}} = \left(F_{\mathit{morph.comp.2}}\right)^{-1/3}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.19 | 0.01 | strong |

| config. A | 1.46 | 0.01 | strong |

| config. B | 1.38 | 0.01 | strong |

| config. C | 1.38 | 0.01 | strong |

| config. D | 1.38 | 0.01 | strong |

| config. E | 1.38 | 0.01 | strong |

Sphericity¶

QCFX

Sphericity [Aerts2014] is a further measure to describe how sphere-like the volume is:

By definition \(F_{\mathit{morph.sphericity}} = \left(F_{\mathit{morph.comp.2}}\right)^{1/3}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.843 | 0.005 | very strong |

| config. A | 0.683 | 0.001 | strong |

| config. B | 0.722 | 0.001 | strong |

| config. C | 0.723 | 0.003 | strong |

| config. D | 0.723 | 0.003 | strong |

| config. E | 0.723 | 0.003 | strong |

Asphericity¶

25C7

Asphericity [Apostolova2014] also describes how much the ROI deviates from a perfect sphere, with perfectly spherical volumes having an asphericity of 0. Asphericity is defined as:

By definition \(F_{\mathit{morph.asphericity}} = \left(F_{\mathit{morph.comp.2}}\right)^{-1/3}-1\)

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.186 | 0.001 | strong |

| config. A | 0.463 | 0.002 | strong |

| config. B | 0.385 | 0.001 | moderate |

| config. C | 0.383 | 0.004 | strong |

| config. D | 0.383 | 0.004 | strong |

| config. E | 0.383 | 0.004 | strong |

Centre of mass shift¶

KLMA

The distance between the ROI volume centroid and the intensity-weighted ROI volume is an abstraction of the spatial distribution of low/high intensity regions within the ROI. Let \(N_{v,m}\) be the number of voxels in the morphological mask. The ROI volume centre of mass is calculated from the morphological voxel point set \(\mathbf{X}_{c}\) as follows:

The intensity-weighted ROI volume is based on the intensity mask. The position of each voxel centre in the intensity mask voxel set \(\mathbf{X}_{c,gl}\) is weighted by its corresponding intensity \(\mathbf{X}_{gl}\). Therefore, with \(N_{v,gl}\) the number of voxels in the intensity mask:

The distance between the two centres of mass is then:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.672 | 0.004 | very strong |

| config. A | 52.9 | 28.7 | strong |

| config. B | 63.1 | 29.6 | strong |

| config. C | 45.6 | 2.8 | strong |

| config. D | 64.9 | 2.8 | strong |

| config. E | 68.5 | 2.1 | moderate |

Maximum 3D diameter¶

L0JK

The maximum 3D diameter [Aerts2014] is the distance between the two most distant vertices in the ROI mesh vertex set \(\mathbf{X}_{vx}\):

A practical way of determining the maximum 3D diameter is to first construct the convex hull of the ROI mesh. The convex hull vertex set \(\mathbf{X}_{vx,convex}\) is guaranteed to contain the two most distant vertices of \(\mathbf{X}_{vx}\). This significantly reduces the computational cost of calculating distances between all vertices. Despite the remaining \(O(n^2)\) cost of calculating distances between different vertices, \(\mathbf{X}_{vx,convex}\) is usually considerably smaller in size than \(\mathbf{X}_{vx}\). Moreover, the convex hull is later used for the calculation of other morphological features (Volume density (convex hull) - Area density (convex hull)).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 13.1 | 0.1 | strong |

| config. A | 125 | 1 | strong |

| config. B | 125 | 1 | strong |

| config. C | 125 | 1 | strong |

| config. D | 125 | 1 | strong |

| config. E | 125 | 1 | strong |

Major axis length¶

TDIC

Principal component analysis (PCA) can be used to determine the main orientation of the ROI [Solomon2011]. On a three dimensional object, PCA yields three orthogonal eigenvectors \(\left\lbrace e_1,e_2,e_3\right\rbrace\) and three eigenvalues \(\left( \lambda_1, \lambda_2, \lambda_3\right)\). These eigenvalues and eigenvectors geometrically describe a triaxial ellipsoid. The three eigenvectors determine the orientation of the ellipsoid, whereas the eigenvalues provide a measure of how far the ellipsoid extends along each eigenvector. Several features make use of principal component analysis, namely major, minor and least axis length, elongation, flatness, and approximate enclosing ellipsoid volume and area density.

The eigenvalues can be ordered so that \(\lambda_{\mathit{major}} \geq \lambda_{\mathit{minor}}\geq \lambda_{\mathit{least}}\) correspond to the major, minor and least axes of the ellipsoid respectively. The semi-axes lengths \(a\), \(b\) and \(c\) for the major, minor and least axes are then \(2\sqrt{\lambda_{\mathit{major}}}\), \(2\sqrt{\lambda_{\mathit{minor}}}\) and \(2\sqrt{\lambda_{\mathit{least}}}\) respectively. The major axis length is twice the semi-axis length \(a\), determined using the largest eigenvalue obtained by PCA on the point set of voxel centers \(\mathbf{X}_{c}\) [Heiberger2015]:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 11.4 | 0.1 | very strong |

| config. A | 92.7 | 0.4 | very strong |

| config. B | 92.6 | 0.4 | strong |

| config. C | 93.3 | 0.5 | strong |

| config. D | 93.3 | 0.5 | strong |

| config. E | 93.3 | 0.5 | strong |

Minor axis length¶

P9VJ

The minor axis length of the ROI provides a measure of how far the volume extends along the second largest axis. The minor axis length is twice the semi-axis length \(b\), determined using the second largest eigenvalue obtained by PCA, as described in Section Major axis length.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 9.31 | 0.06 | very strong |

| config. A | 81.5 | 0.4 | very strong |

| config. B | 81.3 | 0.4 | strong |

| config. C | 82 | 0.5 | strong |

| config. D | 82 | 0.5 | strong |

| config. E | 82 | 0.5 | strong |

Least axis length¶

7J51

The least axis is the axis along which the object is least extended. The least axis length is twice the semi-axis length \(c\), determined using the smallest eigenvalue obtained by PCA, as described in Section Major axis length.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 8.54 | 0.05 | very strong |

| config. A | 70.1 | 0.3 | strong |

| config. B | 70.2 | 0.3 | strong |

| config. C | 70.9 | 0.4 | strong |

| config. D | 70.9 | 0.4 | strong |

| config. E | 70.9 | 0.4 | strong |

Elongation¶

Q3CK

The ratio of the major and minor principal axis lengths could be viewed as the extent to which a volume is longer than it is wide, i.e. is eccentric. For computational reasons, we express elongation as an inverse ratio. 1 is thus completely non-elongated, e.g. a sphere, and smaller values express greater elongation of the ROI volume.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.816 | 0.005 | very strong |

| config. A | 0.879 | 0.001 | strong |

| config. B | 0.878 | 0.001 | strong |

| config. C | 0.879 | 0.001 | strong |

| config. D | 0.879 | 0.001 | strong |

| config. E | 0.879 | 0.001 | strong |

Flatness¶

N17B

The ratio of the major and least axis lengths could be viewed as the extent to which a volume is flat relative to its length. For computational reasons, we express flatness as an inverse ratio. 1 is thus completely non-flat, e.g. a sphere, and smaller values express objects which are increasingly flatter.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.749 | 0.005 | very strong |

| config. A | 0.756 | 0.001 | strong |

| config. B | 0.758 | 0.001 | strong |

| config. C | 0.76 | 0.001 | strong |

| config. D | 0.76 | 0.001 | strong |

| config. E | 0.76 | 0.001 | strong |

Volume density (axis-aligned bounding box)¶

PBX1

Volume density is the fraction of the ROI volume and a comparison volume. Here the comparison volume is that of the axis-aligned bounding box (AABB) of the ROI mesh vertex set \(\mathbf{X}_{vx}\) or the ROI mesh convex hull vertex set \(\mathbf{X}_{vx,convex}\). Both vertex sets generate an identical bounding box, which is the smallest box enclosing the vertex set, and aligned with the axes of the reference frame.

This feature is also called extent [ElNaqa2009][Solomon2011].

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.869 | 0.005 | strong |

| config. A | 0.486 | 0.003 | strong |

| config. B | 0.477 | 0.003 | strong |

| config. C | 0.478 | 0.003 | strong |

| config. D | 0.478 | 0.003 | strong |

| config. E | 0.478 | 0.003 | strong |

Area density (axis-aligned bounding box)¶

R59B

Conceptually similar to the volume density (AABB) feature, area density considers the ratio of the ROI surface area and the surface area \(A_{aabb}\) of the axis-aligned bounding box enclosing the ROI mesh vertex set \(\mathbf{X}_{vx}\) [VanDijk2016]. The bounding box is identical to the one used for computing the volume density (AABB) feature. Thus:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.866 | 0.005 | strong |

| config. A | 0.725 | 0.003 | strong |

| config. B | 0.678 | 0.003 | strong |

| config. C | 0.678 | 0.003 | strong |

| config. D | 0.678 | 0.003 | strong |

| config. E | 0.678 | 0.003 | strong |

Volume density (oriented minimum bounding box)¶

ZH1A

Note: This feature currently has no reference values and should not be used.

The volume of an axis-aligned bounding box is generally not the smallest obtainable volume enclosing the ROI. By orienting the box along a different set of axes, a smaller enclosing volume may be attainable. The oriented minimum bounding box (OMBB) of the ROI mesh vertex set \(\mathbf{X}_{vx}\) or \(\mathbf{X}_{vx,convex}\) encloses the vertex set and has the smallest possible volume. A 3D rotating callipers technique was devised by [ORourke1985] to derive the oriented minimum bounding box. Due to computational complexity of this technique, the oriented minimum bounding box is commonly approximated at lower complexity, see e.g. [Barequet2001] and [Chan2001]. Thus:

Here \(V_{ombb}\) is the volume of the oriented minimum bounding box.

Area density (oriented minimum bounding box)¶

IQYR

Note: This feature currently has no reference values and should not be used.

The area density (OMBB) is estimated as:

Here \(A_{ombb}\) is the surface area of the same bounding box as calculated for the volume density (OMBB) feature.

Volume density (approximate enclosing ellipsoid)¶

6BDE

The eigenvectors and eigenvalues from PCA of the ROI voxel center point set \(\mathbf{X}_{c}\) can be used to describe an ellipsoid approximating the point cloud [Mazurowski2016], i.e. the approximate enclosing ellipsoid (AEE). The volume of this ellipsoid is \(V_{\mathit{aee}}=4 \pi\,a\,b\,c /3\), with \(a\), \(b\), and \(c\) being the lengths of the ellipsoid’s semi-principal axes, see Section Major axis length. The volume density (AEE) is then:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.17 | 0.01 | moderate |

| config. A | 1.29 | 0.01 | strong |

| config. B | 1.29 | 0.01 | strong |

| config. C | 1.29 | 0.01 | moderate |

| config. D | 1.29 | 0.01 | moderate |

| config. E | 1.29 | 0.01 | strong |

Area density (approximate enclosing ellipsoid)¶

RDD2

The surface area of an ellipsoid can generally not be evaluated in an elementary form. However, it is possible to approximate the surface using an infinite series. We use the same semi-principal axes as for the volume density (AEE) feature and define:

Here \(\alpha=\sqrt{1-b^2/a^2}\) and \(\beta=\sqrt{1-c^2/a^2}\) are eccentricities of the ellipsoid and \(P_{\nu}\) is the Legendre polynomial function for degree \(\nu\). The Legendre polynomial series, though infinite, converges, and approximation may be stopped early when the incremental gains in precision become limited. By default, we stop the series after \(\nu=20\).

The area density (AEE) is then approximated as:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.36 | 0.01 | moderate |

| config. A | 1.71 | 0.01 | moderate |

| config. B | 1.62 | 0.01 | moderate |

| config. C | 1.62 | 0.01 | moderate |

| config. D | 1.62 | 0.01 | moderate |

| config. E | 1.62 | 0.01 | strong |

Volume density (minimum volume enclosing ellipsoid)¶

SWZ1

Note: This feature currently has no reference values and should not be used.

The minimum volume enclosing ellipsoid (MVEE), unlike the approximate enclosing ellipsoid, is the smallest ellipsoid that encloses the ROI. Direct computation of the MVEE is usually unfeasible, and is therefore approximated. Various approximation algorithms have been described, e.g. [Todd2007][Ahipasaoglu2015], which are usually elaborations on Khachiyan’s barycentric coordinate descent method [Khachiyan1996].

The MVEE encloses the ROI mesh vertex set \(\mathbf{X}_{vx}\), and by definition \(\mathbf{X}_{vx,convex}\) as well. Use of the convex mesh set \(\mathbf{X}_{vx,convex}\) is recommended due to its sparsity compared to the full vertex set. The volume of the MVEE is defined by its semi-axes lengths \(V_{\mathit{mvee}}=4 \pi\,a\,b\,c /3\). Then:

For Khachiyan’s barycentric coordinate descent-based methods we use a default tolerance \(\tau=0.001\) as stopping criterion.

Area density (minimum volume enclosing ellipsoid)¶

BRI8

Note: This feature currently has no reference values and should not be used.

The surface area of an ellipsoid does not have a general elementary form, but should be approximated as noted in Section Area density (approximate enclosing ellipsoid). Let the approximated surface area of the MVEE be \(A_{\mathit{mvee}}\). Then:

Volume density (convex hull)¶

R3ER

The convex hull encloses ROI mesh vertex set \(\mathbf{X}_{vx}\) and consists of the vertex set \(\mathbf{X}_{vx,convex}\) and corresponding faces, see section Maximum 3D diameter. The volume of the ROI mesh convex hull set \(V_{convex}\) is computed in the same way as that of the volume (mesh) feature (Volume (mesh)). The volume density can then be calculated as follows:

This feature is also called solidity [ElNaqa2009][Solomon2011].

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.961 | 0.006 | strong |

| config. A | 0.827 | 0.001 | moderate |

| config. B | 0.829 | 0.001 | moderate |

| config. C | 0.834 | 0.002 | moderate |

| config. D | 0.834 | 0.002 | moderate |

| config. E | 0.834 | 0.002 | moderate |

Area density (convex hull)¶

7T7F

The area of the convex hull \(A_{convex}\) is the sum of the areas of the faces of the convex hull, and is computed in the same way as the surface area (mesh) feature (Surface area (mesh) section). The convex hull is identical to the one used in the volume density (convex hull) feature. Then:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.03 | 0.01 | strong |

| config. A | 1.18 | 0.01 | moderate |

| config. B | 1.12 | 0.01 | moderate |

| config. C | 1.13 | 0.01 | moderate |

| config. D | 1.13 | 0.01 | moderate |

| config. E | 1.13 | 0.01 | moderate |

Integrated intensity¶

99N0

Integrated intensity is the average intensity in the ROI, multiplied by the volume. In the context of 18F-FDG-PET, this feature is often called total lesion glycolysis [Vaidya2012]. Thus:

\(N_{v,gl}\) is the number of voxels in the ROI intensity mask.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | \(1.2 \times 10^{3}\) | 10 | moderate |

| config. A | \(4.81 \times 10^{6}\) | \(3.2 \times 10^{5}\) | strong |

| config. B | \(4.12 \times 10^{6}\) | \(3.2 \times 10^{5}\) | strong |

| config. C | \(-1.8 \times 10^{7}\) | \(1.4 \times 10^{6}\) | strong |

| config. D | \(-8.64 \times 10^{6}\) | \(1.56 \times 10^{6}\) | strong |

| config. E | \(-8.31 \times 10^{6}\) | \(1.6 \times 10^{6}\) | strong |

Moran’s I index¶

N365

Moran’s I index is an indicator of spatial autocorrelation [Moran1950][Dale2002]. It is defined as:

As before \(N_{v,gl}\) is the number of voxels in the ROI intensity mask, \(\mu\) is the mean of \(\mathbf{X}_{gl}\) and \(w_{k_{1}k_{2}}\) is a weight factor, equal to the inverse Euclidean distance between voxels \(k_{1}\) and \(k_{2}\) of the point set \(\mathbf{X}_{c,gl}\) of the ROI intensity mask [DaSilva2008]. Values of Moran’s I close to 1.0, 0.0 and -1.0 indicate high spatial autocorrelation, no spatial autocorrelation and high spatial anti-autocorrelation, respectively.

Note that for an ROI containing many voxels, calculating Moran’s I index may be computationally expensive due to \(O(n^2)\) behaviour. Approximation by repeated subsampling of the ROI may be required to make the calculation tractable, at the cost of accuracy.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.0397 | 0.0003 | strong |

| config. A | 0.0322 | 0.0002 | moderate |

| config. B | 0.0329 | 0.0001 | moderate |

| config. C | 0.0824 | 0.0003 | moderate |

| config. D | 0.0622 | 0.0013 | moderate |

| config. E | 0.0596 | 0.0014 | moderate |

Geary’s C measure¶

NPT7

Geary’s C measure assesses spatial autocorrelation, similar to Moran’s I index [Geary1954][Dale2002]. In contrast to Moran’s I index, Geary’s C measure directly assesses intensity differences between voxels and is more sensitive to local spatial autocorrelation. This measure is defined as:

As with Moran’s I, \(N_{v,gl}\) is the number of voxels in the ROI intensity mask, \(\mu\) is the mean of \(\mathbf{X}_{gl}\) and \(w_{k_{1}k_{2}}\) is a weight factor, equal to the inverse Euclidean distance between voxels \(k_{1}\) and \(k_{2}\) of the ROI voxel point set \(\mathbf{X}_{c,gl}\) [DaSilva2008].

Just as Moran’s I, Geary’s C measure exhibits \(O(n^2)\) behaviour and an approximation scheme may be required to make calculation feasible for large ROIs.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.974 | 0.006 | strong |

| config. A | 0.863 | 0.001 | moderate |

| config. B | 0.862 | 0.001 | moderate |

| config. C | 0.846 | 0.001 | moderate |

| config. D | 0.851 | 0.001 | moderate |

| config. E | 0.853 | 0.001 | moderate |

Local intensity features¶

9ST6

Voxel intensities within a defined neighbourhood around a center voxel are used to compute local intensity features. Unlike many other feature sets, local features do not draw solely on intensities within the ROI. While only voxels within the ROI intensity map can be used as a center voxel, the local neighbourhood draws upon all voxels regardless of being in an ROI.

Aggregating features¶

By definition, local intensity features are calculated in 3D (DHQ4), and not per slice.

Local intensity peak¶

VJGA

The local intensity peak was originally devised for reducing variance in determining standardised uptake values [Wahl2009]. It is defined as the mean intensity in a 1 cm3 spherical volume (in world coordinates), which is centered on the voxel with the maximum intensity level in the ROI intensity mask [Frings2014].

To calculate \(F_{\mathit{loc.peak.local}}\), we first select all the voxels with centers within a radius \(r=\left(\frac{3}{4 \pi}\right)^{1/3} \approx 0.62\) cm of the center of the maximum intensity voxel. Subsequently, the mean intensity of the selected voxels, including the center voxel, are calculated.

In case the maximum intensity is found in multiple voxels within the ROI, local intensity peak is calculated for each of these voxels, and the highest local intensity peak is chosen.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 2.6 | — | strong |

| config. A | \(-\)277 | 10 | moderate |

| config. B | 178 | 10 | moderate |

| config. C | 169 | 10 | moderate |

| config. D | 201 | 10 | strong |

| config. E | 181 | 13 | moderate |

Global intensity peak¶

0F91

The global intensity peak feature \(F_{\mathit{loc.peak.global}}\) is similar to the local intensity peak [Frings2014]. However, instead of calculating the mean intensity for the voxel(s) with the maximum intensity, the mean intensity is calculated within a 1 cm3 neighbourhood for every voxel in the ROI intensity mask. The highest intensity peak value is then selected.

Calculation of the global intensity peak feature may be accelerated by construction and application of an appropriate spatial spherical mean convolution filter, due to the convolution theorem. In this case one would first construct an empty 3D filter that will fit a 1 cm3 sphere. Within this context, the filter voxels may be represented by a point set, akin to \(\mathbf{X}_{c}\) in Morphological features. Euclidean distances in world spacing between the central voxel of the filter and every remaining voxel are computed. If this distance lies within radius \(r=\left(\frac{3}{4 \pi}\right)^{1/3} \approx 0.62\) the corresponding voxel receives a label \(1\), and \(0\) otherwise. Subsequent summation of the voxel labels yields \(N_s\), the number of voxels within the 1 cm3 sphere. The filter then becomes a spherical mean filter by dividing the labels by \(N_s\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3.1 | — | strong |

| config. A | 189 | 5 | moderate |

| config. B | 178 | 5 | moderate |

| config. C | 180 | 5 | moderate |

| config. D | 201 | 5 | moderate |

| config. E | 181 | 5 | moderate |

Intensity-based statistical features¶

UHIW

The intensity-based statistical features describe how intensities within the region of interest (ROI) are distributed. The features in this set do not require discretisation, and may be used to describe a continuous intensity distribution. Intensity-based statistical features are not meaningful if the intensity scale is arbitrary.

The set of intensities of the \(N_v\) voxels included in the ROI intensity mask is denoted as \(\mathbf{X}_{gl}=\left\lbrace X_{gl,1},X_{gl,2},\ldots,X_{gl,N_v}\right\rbrace\).

Aggregating features¶

We recommend calculating intensity-based statistical features using the 3D volume (DHQ4). An approach that computes intensity-based statistical features per slice and subsequently averages them (3IDG) is not recommended.

Mean intensity¶

Q4LE

The mean intensity of \(\mathbf{X}_{gl}\) is calculated as:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 2.15 | — | very strong |

| config. A | 13.4 | 1.1 | very strong |

| config. B | 11.5 | 1.1 | strong |

| config. C | \(-\)49 | 2.9 | very strong |

| config. D | \(-\)23.5 | 3.9 | strong |

| config. E | \(-\)22.6 | 4.1 | strong |

Intensity variance¶

ECT3

The intensity variance of \(\mathbf{X}_{gl}\) is defined as:

Note that we do not apply a bias correction when computing the variance.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3.05 | — | very strong |

| config. A | \(1.42 \times 10^{4}\) | 400 | very strong |

| config. B | \(1.44 \times 10^{4}\) | 400 | very strong |

| config. C | \(5.06 \times 10^{4}\) | \(1.4 \times 10^{3}\) | strong |

| config. D | \(3.28 \times 10^{4}\) | \(2.1 \times 10^{3}\) | strong |

| config. E | \(3.51 \times 10^{4}\) | \(2.2 \times 10^{3}\) | strong |

Intensity skewness¶

KE2A

The skewness of the intensity distribution of \(\mathbf{X}_{gl}\) is defined as:

Here \(\mu=F_{\mathit{stat.mean}}\). If the intensity variance \(F_{\mathit{stat.var}} = 0\), \(F_{\mathit{stat.skew}}=0\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.08 | — | very strong |

| config. A | \(-\)2.47 | 0.05 | very strong |

| config. B | \(-\)2.49 | 0.05 | very strong |

| config. C | \(-\)2.14 | 0.05 | very strong |

| config. D | \(-\)2.28 | 0.06 | strong |

| config. E | \(-\)2.3 | 0.07 | strong |

(Excess) intensity kurtosis¶

IPH6

Kurtosis, or technically excess kurtosis, is a measure of peakedness in the intensity distribution \(\mathbf{X}_{gl}\):

Here \(\mu=F_{\mathit{stat.mean}}\). Note that kurtosis is corrected by a Fisher correction of -3 to center it on 0 for normal distributions. If the intensity variance \(F_{\mathit{stat.var}} = 0\), \(F_{\mathit{stat.kurt}}=0\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | \(-\)0.355 | — | very strong |

| config. A | 5.96 | 0.24 | very strong |

| config. B | 5.93 | 0.24 | very strong |

| config. C | 3.53 | 0.23 | very strong |

| config. D | 4.35 | 0.32 | strong |

| config. E | 4.44 | 0.33 | strong |

Median intensity¶

Y12H

The median intensity \(F_{\mathit{stat.median}}\) is the sample median of \(\mathbf{X}_{gl}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | 46 | 0.3 | very strong |

| config. B | 45 | 0.3 | strong |

| config. C | 40 | 0.4 | strong |

| config. D | 42 | 0.4 | strong |

| config. E | 43 | 0.5 | strong |

Minimum intensity¶

1GSF

The minimum intensity is equal to the lowest intensity present in \(\mathbf{X}_{gl}\), i.e:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | \(-\)500 | — | very strong |

| config. B | \(-\)500 | — | very strong |

| config. C | \(-\)939 | 4 | strong |

| config. D | \(-\)724 | 12 | strong |

| config. E | \(-\)743 | 13 | strong |

10th intensity percentile¶

QG58

\(P_{10}\) is the 10th percentile of \(\mathbf{X}_{gl}\). \(P_{10}\) is a more robust alternative to the minimum intensity.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | \(-\)129 | 8 | strong |

| config. B | \(-\)136 | 8 | strong |

| config. C | \(-\)424 | 14 | very strong |

| config. D | \(-\)304 | 20 | strong |

| config. E | \(-\)310 | 21 | strong |

90th intensity percentile¶

8DWT

\(P_{90}\) is the 90th percentile of \(\mathbf{X}_{gl}\). \(P_{90}\) is a more robust alternative to the maximum intensity.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 4 | — | very strong |

| config. A | 95 | — | strong |

| config. B | 91 | — | strong |

| config. C | 86 | 0.1 | strong |

| config. D | 86 | 0.1 | strong |

| config. E | 93 | 0.2 | strong |

Note that the 90:sup:`th` intensity percentile obtained for the digital phantom may differ from the above reference value depending on the software implementation used to compute it. For example, some implementations were found to produce a value of 4.2 instead of 4.

Maximum intensity¶

84IY

The maximum intensity is equal to the highest intensity present in \(\mathbf{X}_{gl}\), i.e:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 6 | — | very strong |

| config. A | 377 | 9 | very strong |

| config. B | 391 | 9 | strong |

| config. C | 393 | 10 | very strong |

| config. D | 521 | 22 | strong |

| config. E | 345 | 9 | strong |

Intensity interquartile range¶

SALO

The interquartile range (IQR) of \(\mathbf{X}_{gl}\) is defined as:

\(P_{25}\) and \(P_{75}\) are the 25th and 75th percentiles of \(\mathbf{X}_{gl}\), respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3 | — | very strong |

| config. A | 56 | 0.5 | very strong |

| config. B | 52 | 0.5 | strong |

| config. C | 67 | 4.9 | very strong |

| config. D | 57 | 4.1 | strong |

| config. E | 62 | 3.5 | strong |

Intensity range¶

2OJQ

The intensity range is defined as:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 5 | — | very strong |

| config. A | 877 | 9 | very strong |

| config. B | 891 | 9 | strong |

| config. C | \(1.33 \times 10^{3}\) | 20 | strong |

| config. D | \(1.24 \times 10^{3}\) | 40 | strong |

| config. E | \(1.09 \times 10^{3}\) | 30 | strong |

Intensity-based mean absolute deviation¶

4FUA

Mean absolute deviation is a measure of dispersion from the mean of \(\mathbf{X}_{gl}\):

Here \(\mu=F_{\mathit{stat.mean}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.55 | — | very strong |

| config. A | 73.6 | 1.4 | very strong |

| config. B | 74.4 | 1.4 | strong |

| config. C | 158 | 4 | very strong |

| config. D | 123 | 6 | strong |

| config. E | 125 | 6 | strong |

Intensity-based robust mean absolute deviation¶

1128

The intensity-based mean absolute deviation feature may be influenced by outliers. To increase robustness, the set of intensities can be restricted to those which lie closer to the center of the distribution. Let

Then \(\mathbf{X}_{gl,10-90}\) is the set of \(N_{v,10-90}\leq N_v\) voxels in \(\mathbf{X}_{gl}\) whose intensities fall in the interval bounded by the 10th and 90th percentiles of \(\mathbf{X}_{gl}\). The robust mean absolute deviation is then:

\(\overline{X}_{gl,10-90}\) denotes the sample mean of \(\mathbf{X_{gl,10-90}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.11 | — | very strong |

| config. A | 27.7 | 0.8 | very strong |

| config. B | 27.3 | 0.8 | strong |

| config. C | 66.8 | 3.5 | very strong |

| config. D | 46.8 | 3.6 | strong |

| config. E | 46.5 | 3.7 | strong |

Intensity-based median absolute deviation¶

N72L

Median absolute deviation is similar in concept to the intensity-based mean absolute deviation, but measures dispersion from the median intensity instead of the mean intensity. Thus:

Here, median \(M = F_{\mathit{stat.median}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.15 | — | very strong |

| config. A | 64.3 | 1 | strong |

| config. B | 63.8 | 1 | strong |

| config. C | 119 | 4 | strong |

| config. D | 94.7 | 3.8 | strong |

| config. E | 97.9 | 3.9 | strong |

Intensity-based coefficient of variation¶

7TET

The coefficient of variation measures the dispersion of \(\mathbf{X}_{gl}\). It is defined as:

Here \(\sigma={F_{\mathit{stat.var}}}^{1/2}\) and \(\mu=F_{\mathit{stat.mean}}\) are the standard deviation and mean of the intensity distribution, respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.812 | — | very strong |

| config. A | 8.9 | 4.98 | strong |

| config. B | 10.4 | 5.2 | strong |

| config. C | \(-\)4.59 | 0.29 | strong |

| config. D | \(-\)7.7 | 1.01 | strong |

| config. E | \(-\)8.28 | 0.95 | strong |

Intensity-based quartile coefficient of dispersion¶

9S40

The quartile coefficient of dispersion is a more robust alternative to the intensity-based coefficient of variance. It is defined as:

\(P_{25}\) and \(P_{75}\) are the 25th and 75th percentile of \(\mathbf{X}_{gl}\), respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.6 | — | very strong |

| config. A | 0.636 | 0.008 | strong |

| config. B | 0.591 | 0.008 | strong |

| config. C | 1.03 | 0.4 | strong |

| config. D | 0.74 | 0.011 | strong |

| config. E | 0.795 | 0.337 | strong |

Intensity-based energy¶

N8CA

The energy [Aerts2014] of \(\mathbf{X}_{gl}\) is defined as:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 567 | — | very strong |

| config. A | \(1.65 \times 10^{9}\) | \(2 \times 10^{7}\) | very strong |

| config. B | \(3.98 \times 10^{8}\) | \(1.1 \times 10^{7}\) | strong |

| config. C | \(2.44 \times 10^{9}\) | \(1.2 \times 10^{8}\) | strong |

| config. D | \(1.48 \times 10^{9}\) | \(1.4 \times 10^{8}\) | strong |

| config. E | \(1.58 \times 10^{9}\) | \(1.4 \times 10^{8}\) | strong |

Root mean square intensity¶

5ZWQ

The root mean square intensity feature [Aerts2014], which is also called the quadratic mean, of \(\mathbf{X}_{gl}\) is defined as:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 2.77 | — | very strong |

| config. A | 120 | 2 | very strong |

| config. B | 121 | 2 | strong |

| config. C | 230 | 4 | strong |

| config. D | 183 | 7 | strong |

| config. E | 189 | 7 | strong |

Intensity histogram features¶

ZVCW

An intensity histogram is generated by discretising the original intensity distribution \(\mathbf{X}_{gl}\) into intensity bins. Approaches to discretisation are described in Section Intensity discretisation.

Let \(\mathbf{X}_{d}=\left\lbrace X_{d,1},X_{d,2},\ldots,X_{d,N_v}\right\rbrace\) be the set of \(N_g\) discretised intensities of the \(N_v\) voxels in the ROI intensity mask. Let \(\mathbf{H}=\left\lbrace n_1, n_2,\ldots, n_{N_g}\right\rbrace\) be the histogram with frequency count \(n_i\) of each discretised intensity \(i\) in \(\mathbf{X}_{d}\). The occurrence probability \(p_i\) for each discretised intensity \(i\) is then approximated as \(p_i=n_i/N_v\).

Aggregating features¶

We recommend calculating intensity histogram features using the 3D volume (DHQ4). An approach that computes features per slice and subsequently averages (3IDG) is not recommended.

Mean discretised intensity¶

X6K6

The mean [Aerts2014] of \(\mathbf{X}_{d}\) is calculated as:

An equivalent definition is:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 2.15 | — | very strong |

| config. A | 21.1 | 0.1 | strong |

| config. B | 18.9 | 0.3 | strong |

| config. C | 38.6 | 0.2 | strong |

| config. D | 18.5 | 0.5 | strong |

| config. E | 21.7 | 0.3 | strong |

Discretised intensity variance¶

CH89

The variance [Aerts2014] of \(\mathbf{X}_{d}\) is defined as:

Here \(\mu=F_{\mathit{ih.mean}}\). This definition is equivalent to:

Note that no bias-correction is applied when computing the variance.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3.05 | — | strong |

| config. A | 22.8 | 0.6 | strong |

| config. B | 18.7 | 0.2 | strong |

| config. C | 81.1 | 2.1 | strong |

| config. D | 21.7 | 0.4 | strong |

| config. E | 30.4 | 0.8 | strong |

Discretised intensity skewness¶

88K1

The skewness [Aerts2014] of \(\mathbf{X}_{d}\) is defined as:

Here \(\mu=F_{\mathit{ih.mean}}\). This definition is equivalent to:

If the discretised intensity variance \(F_{\mathit{ih.var}} = 0\), \(F_{\mathit{ih.skew}}=0\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.08 | — | very strong |

| config. A | \(-\)2.46 | 0.05 | strong |

| config. B | \(-\)2.47 | 0.05 | strong |

| config. C | \(-\)2.14 | 0.05 | strong |

| config. D | \(-\)2.27 | 0.06 | strong |

| config. E | \(-\)2.29 | 0.07 | strong |

(Excess) discretised intensity kurtosis¶

C3I7

Kurtosis [Aerts2014], or technically excess kurtosis, measures the peakedness of the \(\mathbf{X}_{d}\) distribution:

Here \(\mu=F_{\mathit{ih.mean}}\). An alternative, but equivalent, definition is:

Note that kurtosis is corrected by a Fisher correction of -3 to center kurtosis on 0 for normal distributions. If the discretised intensity variance \(F_{\mathit{ih.var}} = 0\), \(F_{\mathit{ih.kurt}}=0\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | \(-\)0.355 | — | very strong |

| config. A | 5.9 | 0.24 | strong |

| config. B | 5.84 | 0.24 | strong |

| config. C | 3.52 | 0.23 | strong |

| config. D | 4.31 | 0.32 | strong |

| config. E | 4.4 | 0.33 | strong |

Median discretised intensity¶

WIFQ

The median \(F_{\mathit{ih.median}}\) is the sample median of \(\mathbf{X}_{d}\) [Aerts2014].

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | 22 | — | strong |

| config. B | 20 | 0.3 | strong |

| config. C | 42 | — | strong |

| config. D | 20 | 0.5 | strong |

| config. E | 24 | 0.2 | strong |

Minimum discretised intensity¶

1PR8

The minimum discretised intensity [Aerts2014] is equal to the lowest discretised intensity present in \(\mathbf{X}_{d}\), i.e.:

For fixed bin number discretisation \(F_{\mathit{ih.min}}=1\) by definition, but \(F_{\mathit{ih.min}}>1\) is possible for fixed bin size discretisation.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | 1 | — | strong |

| config. B | 1 | — | strong |

| config. C | 3 | 0.16 | strong |

| config. D | 1 | — | strong |

| config. E | 1 | — | strong |

10th discretised intensity percentile¶

1PR8

\(P_{10}\) is the 10th percentile of \(\mathbf{X}_{d}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | 15 | 0.4 | strong |

| config. B | 14 | 0.5 | strong |

| config. C | 24 | 0.7 | strong |

| config. D | 11 | 0.7 | strong |

| config. E | 13 | 0.7 | strong |

90th discretised intensity percentile¶

GPMT

\(P_{90}\) is the 90th percentile of \(\mathbf{X}_{d}\) and is defined as \(F_{\mathit{ih.P90}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 4 | — | strong |

| config. A | 24 | — | strong |

| config. B | 22 | 0.3 | strong |

| config. C | 44 | — | strong |

| config. D | 21 | 0.5 | strong |

| config. E | 25 | 0.2 | strong |

Note that the 90th discretised intensity percentile obtained for the digital phantom may differ from the above reference value depending on the software implementation used to compute it. For example, some implementations were found to produce a value of 4.2 instead of 4 for this feature.

Maximum discretised intensity¶

3NCY

The maximum discretised intensity [Aerts2014] is equal to the highest discretised intensity present in \(\mathbf{X}_{d}\), i.e.:

By definition, \(F_{\mathit{ih.max}}=N_g\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 6 | — | very strong |

| config. A | 36 | 0.4 | strong |

| config. B | 32 | — | strong |

| config. C | 56 | 0.5 | strong |

| config. D | 32 | — | strong |

| config. E | 32 | — | strong |

Intensity histogram mode¶

AMMC

The mode of \(\mathbf{X}_{d}\) \(F_{\mathit{ih.mode}}\) is the most common discretised intensity present, i.e. the value \(i\) for with the highest count \(n_i\). The mode may not be uniquely defined. When the highest count is found in multiple bins, the value \(i\) of the bin closest to the mean discretised intensity is chosen as intensity histogram mode. In pathological cases with two such bins equidistant to the mean, the bin to the left of the mean is selected.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | very strong |

| config. A | 23 | — | strong |

| config. B | 20 | 0.3 | strong |

| config. C | 43 | 0.1 | strong |

| config. D | 20 | 0.4 | strong |

| config. E | 24 | 0.1 | strong |

Discretised intensity interquartile range¶

WR0O

The interquartile range (IQR) of \(\mathbf{X}_{d}\) is defined as:

\(P_{25}\) and \(P_{75}\) are the 25th and 75th percentile of \(\mathbf{X}_{d}\), respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3 | — | very strong |

| config. A | 2 | — | strong |

| config. B | 2 | — | strong |

| config. C | 3 | 0.21 | strong |

| config. D | 2 | 0.06 | strong |

| config. E | 1 | 0.06 | strong |

Discretised intensity range¶

5Z3W

The discretised intensity range [Aerts2014] is defined as:

For fixed bin number discretisation, the discretised intensity range equals \(N_g\) by definition.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 5 | — | very strong |

| config. A | 35 | 0.4 | strong |

| config. B | 31 | — | strong |

| config. C | 53 | 0.6 | strong |

| config. D | 31 | — | strong |

| config. E | 31 | — | strong |

Intensity histogram mean absolute deviation¶

D2ZX

The mean absolute deviation [Aerts2014] is a measure of dispersion from the mean of \(\mathbf{X}_{d}\):

Here \(\mu=F_{\mathit{ih.mean}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.55 | — | very strong |

| config. A | 2.94 | 0.06 | strong |

| config. B | 2.67 | 0.03 | strong |

| config. C | 6.32 | 0.15 | strong |

| config. D | 3.15 | 0.05 | strong |

| config. E | 3.69 | 0.1 | strong |

Intensity histogram robust mean absolute deviation¶

WRZB

Intensity histogram mean absolute deviation may be affected by outliers. To increase robustness, the set of discretised intensities under consideration can be restricted to those which are closer to the center of the distribution. Let

In short, \(\mathbf{X}_{d,10-90}\) is the set of \(N_{v,10-90}\leq N_v\) voxels in \(\mathbf{X}_{d}\) whose discretised intensities fall in the interval bounded by the 10th and 90th percentiles of \(\mathbf{X}_{d}\). The robust mean absolute deviation is then:

\(\overline{X}_{d,10-90}\) denotes the sample mean of \(\mathbf{X}_{d,10-90}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.11 | — | very strong |

| config. A | 1.18 | 0.04 | strong |

| config. B | 1.03 | 0.03 | moderate |

| config. C | 2.59 | 0.14 | strong |

| config. D | 1.33 | 0.06 | strong |

| config. E | 1.46 | 0.09 | moderate |

Intensity histogram median absolute deviation¶

4RNL

Histogram median absolute deviation is conceptually similar to histogram mean absolute deviation, but measures dispersion from the median instead of mean. Thus:

Here, median \(M = F_{\mathit{ih.median}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.15 | — | very strong |

| config. A | 2.58 | 0.05 | strong |

| config. B | 2.28 | 0.02 | strong |

| config. C | 4.75 | 0.12 | strong |

| config. D | 2.41 | 0.04 | strong |

| config. E | 2.89 | 0.07 | strong |

Intensity histogram coefficient of variation¶

CWYJ

The coefficient of variation measures the dispersion of the discretised intensity distribution. It is defined as:

Here \(\sigma={F_{\mathit{ih.var}}}^{1/2}\) and \(\mu=F_{\mathit{ih.mean}}\) are the standard deviation and mean of the discretised intensity distribution, respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.812 | — | very strong |

| config. A | 0.227 | 0.004 | strong |

| config. B | 0.229 | 0.004 | strong |

| config. C | 0.234 | 0.005 | strong |

| config. D | 0.252 | 0.006 | strong |

| config. E | 0.254 | 0.006 | strong |

Intensity histogram quartile coefficient of dispersion¶

SLWD

The quartile coefficient of dispersion is a more robust alternative to the intensity histogram coefficient of variance. It is defined as:

\(P_{25}\) and \(P_{75}\) are the 25th and 75th percentile of \(\mathbf{X}_{d}\), respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.6 | — | very strong |

| config. A | 0.0455 | — | strong |

| config. B | 0.05 | 0.0005 | strong |

| config. C | 0.0361 | 0.0027 | strong |

| config. D | 0.05 | 0.0021 | strong |

| config. E | 0.0213 | 0.0015 | strong |

Discretised intensity entropy¶

TLU2

Entropy [Aerts2014] is an information-theoretic concept that gives a metric for the information contained within \(\mathbf{X}_{d}\). The particular metric used is Shannon entropy, which is defined as:

Note that entropy can only be meaningfully defined for discretised intensities as it will tend to \(-\log_2 N_v\) for continuous intensity distributions.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1.27 | — | very strong |

| config. A | 3.36 | 0.03 | very strong |

| config. B | 3.16 | 0.01 | strong |

| config. C | 3.73 | 0.04 | strong |

| config. D | 2.94 | 0.01 | strong |

| config. E | 3.22 | 0.02 | strong |

Discretised intensity uniformity¶

BJ5W

Uniformity [Aerts2014] of \(\mathbf{X}_{d}\) is defined as:

For histograms where most intensities are contained in a single bin, uniformity approaches \(1\). The lower bound is \(1/N_{g}\).

Note that this feature is sometimes referred to as energy.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.512 | — | very strong |

| config. A | 0.15 | 0.002 | very strong |

| config. B | 0.174 | 0.001 | strong |

| config. C | 0.14 | 0.003 | strong |

| config. D | 0.229 | 0.003 | strong |

| config. E | 0.184 | 0.001 | strong |

Maximum histogram gradient¶

12CE

The histogram gradient \(\mathbf{H}'\) of intensity histogram \(\mathbf{H}\) can be calculated as:

Histogram \(\mathbf{H}\) should be non-sparse, i.e. bins where \(n_i=0\) should not be omitted. Ostensibly, the histogram gradient can be calculated in different ways. The method above has the advantages of being easy to implement and leading to a gradient \(\mathbf{H}'\) with same size as \(\mathbf{H}\). This helps maintain a direct correspondence between the discretised intensities in \(\mathbf{H}\) and the bins of \(\mathbf{H}'\). The maximum histogram gradient [VanDijk2016] is:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 8 | — | very strong |

| config. A | \(1.1 \times 10^{4}\) | 100 | strong |

| config. B | \(3.22 \times 10^{3}\) | 50 | strong |

| config. C | \(4.75 \times 10^{3}\) | 30 | strong |

| config. D | \(7.26 \times 10^{3}\) | 200 | strong |

| config. E | \(6.01 \times 10^{3}\) | 130 | strong |

Maximum histogram gradient intensity¶

8E6O

The maximum histogram gradient intensity [VanDijk2016] \(F_{\mathit{ih.max.grad.gl}}\) is the discretised intensity corresponding to the maximum histogram gradient, i.e. the value \(i\) in \(\mathbf{H}\) for which \(\mathbf{H}'\) is maximal.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3 | — | strong |

| config. A | 21 | — | strong |

| config. B | 19 | 0.3 | strong |

| config. C | 41 | — | strong |

| config. D | 19 | 0.4 | strong |

| config. E | 23 | 0.2 | moderate |

Minimum histogram gradient¶

VQB3

The minimum histogram gradient [VanDijk2016] is:

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | \(-\)50 | — | very strong |

| config. A | \(-1.01 \times 10^{4}\) | 100 | strong |

| config. B | \(-3.02 \times 10^{3}\) | 50 | strong |

| config. C | \(-4.68 \times 10^{3}\) | 50 | strong |

| config. D | \(-6.67 \times 10^{3}\) | 230 | strong |

| config. E | \(-6.11 \times 10^{3}\) | 180 | strong |

Minimum histogram gradient intensity¶

RHQZ

The minimum histogram gradient intensity [VanDijk2016] \(F_{\mathit{ih.min.grad.gl}}\) is the discretised intensity corresponding to the minimum histogram gradient, i.e. the value \(i\) in \(\mathbf{H}\) for which \(\mathbf{H}'\) is minimal.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 1 | — | strong |

| config. A | 24 | — | strong |

| config. B | 22 | 0.3 | strong |

| config. C | 44 | — | strong |

| config. D | 22 | 0.4 | strong |

| config. E | 25 | 0.2 | strong |

Intensity-volume histogram features¶

P88C

The (cumulative) intensity-volume histogram (IVH) of the set \(\mathbf{X}_{gl}\) of voxel intensities in the ROI intensity mask describes the relationship between discretised intensity \(i\) and the fraction of the volume containing at least intensity \(i\), \(\nu\) [ElNaqa2009].

Depending on the imaging modality, the calculation of IVH features requires discretising \(\mathbf{X}_{gl}\) to generate a new voxel set \(\mathbf{X}_{d,gl}\) with discretised intensities. Moreover, the total range \(\mathbf{G}\) of discretised intensities and the discretisation interval \(w_d\) should be provided or determined. The total range \(\mathbf{G}\) determines the range of discretised intensities to be included in the IVH, whereas the discretisation interval determines the intensity difference between adjacent discretised intensities in the IVH.

Recommendations for discretisation parameters differ depending on what type of data the image represents, and how it is represented. These recommendations are described below.

Discrete calibrated image intensities¶

Some imaging modalities by default generate voxels with calibrated, discrete intensities – for example CT. In this case, the discretised ROI voxel set \(\mathbf{X}_{d,gl}=\mathbf{X}_{gl}\), i.e. no discretisation required. If a re-segmentation range is provided (see Section Re-segmentation), the total range \(\mathbf{G}\) is equal to the re-segmentation range. In the case of a half-open re-segmentation range, the upper limit of the range is \(\text{max}(\mathbf{X}_{gl})\). When no range is provided, \(\mathbf{G}=[\text{min}(\mathbf{X}_{gl}),\text{max}(\mathbf{X}_{gl})]\). The discretisation interval is \(w_d=1\).

Continuous calibrated image intensities¶

Imaging with calibrated, continuous intensities such as PET requires discretisation to determine the IVH, while preserving the quantitative intensity information. The use of a fixed bin size discretisation method is thus recommended (see Intensity discretisation). This method requires a minimum intensity \(X_{gl,min}\), a maximum intensity \(X_{gl,max}\) and the bin width \(w_b\). If a re-segmentation range is defined (see Re-segmentation), \(X_{gl,min}\) is set to the lower bound of the re-segmentation range and \(X_{gl,max}\) to the upper bound; otherwise \(X_{gl,min} = \text{min}(\mathbf{X}_{gl})\) and \(X_{gl,max} = \text{max}(\mathbf{X}_{gl})\) (i.e. the minimum and maximum intensities in the imaging volume prior to discretisation). The bin width \(w_b\) is modality dependent, but should be small relative to the intensity range, e.g. 0.10 SUV for 18F-FDG-PET.

Next, fixed bin size discretisation produces the voxel set \(\mathbf{X}_{d}\) of bin numbers, which needs to be converted to bin centers in order to maintain a direct relationship with the original intensities. We thus replace bin numbers \(\mathbf{X}_{d}\) with the intensity corresponding to the bin center:

The total range is then \(\mathbf{G}=[X_{gl,min}+0.5w_b, X_{gl,max}-0.5w_b]\). In this case, the discretisation interval matches the bin width, i.e. \(w_d=w_b\).

Arbitrary intensity units¶

Some imaging modalities, such as many MRI sequences, produce arbitrary intensities. In such cases, a fixed bin number discretisation method with \(N_g=1000\) bins is recommended (see Intensity discretisation). The discretisation bin width is \(w_b=\left(X_{gl,max}-X_{gl,min}\right)/N_g\), with \(X_{gl,max}=\text{max}\left(\mathbf{X}_{gl}\right)\) and \(X_{gl,min}=\text{min}\left(\mathbf{X}_{gl}\right)\), as re-segmentation ranges generally cannot be provided for non-calibrated intensities. The fixed bin number discretisation produces the voxel set \(\mathbf{X}_{d} \in \{1,2,\ldots,N_g\}\). Because of the lack of calibration, \(\mathbf{X}_{d,gl}=\mathbf{X}_{d}\), and consequentially the discretisation interval is \(w_d=1\) and the total range is \(\mathbf{G}=[1,N_g]\)

Calculating the IV histogram¶

We use \(\mathbf{X}_{d,gl}\) to calculate fractional volumes and fractional intensities.

As voxels for the same image stack generally all have the same dimensions, we may define fractional volume \(\nu\) for discretised intensity \(i\):

Here \(\left[\ldots\right]\) is an Iverson bracket, yielding \(1\) if the condition is true and \(0\) otherwise. In essence, we count the voxels containing a discretised intensity smaller than \(i\), divide by the total number of voxels, and then subtract this volume fraction to find \(\nu_i\).

The intensity fraction \(\gamma\) for discretised intensity \(i\) in the range \(\mathbf{G}\) is calculated as:

Note that intensity fractions are also calculated for discretised intensities that are absent in \(\mathbf{X}_{d,gl}\). For example intensities 2 and 5 are absent in the digital phantom (see Reference data sets Chapter), but are still evaluated to determine both the fractional volume and the intensity fraction. An example IVH for the digital phantom is shown in Table 71.

| \(i\) | \(\gamma\) | \(\nu\) |

|---|---|---|

| 1 | 0.0 | 1.000 |

| 2 | 0.2 | 0.324 |

| 3 | 0.4 | 0.324 |

| 4 | 0.6 | 0.311 |

| 5 | 0.8 | 0.095 |

| 6 | 1.0 | 0.095 |

Aggregating features¶

We recommend calculating intensity-volume histogram features using the 3D volume (DHQ4). Computing features per slice and subsequently averaging (3IDG) is not recommended.

Volume at intensity fraction¶

BC2M

The volume at intensity fraction \(V_x\) is the largest volume fraction \(\nu\) that has an intensity fraction \(\gamma\) of at least \(x\%\). This differs from conceptually similar dose-volume histograms used in radiotherapy planning, where \(V_{10}\) would indicate the volume fraction receiving at least 10 Gy planned dose. [ElNaqa2009] defined both \(V_{10}\) and \(V_{90}\) as features. In the context of this work, these two features are defined as \(F_{\mathit{ivh.V10}}\) and \(F_{\mathit{ivh.V90}}\), respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.324 | — | very strong |

| config. A | 0.978 | 0.001 | strong |

| config. B | 0.977 | 0.001 | strong |

| config. C | 0.998 | 0.001 | moderate |

| config. D | 0.972 | 0.003 | strong |

| config. E | 0.975 | 0.002 | strong |

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.0946 | — | very strong |

| config. A | \(6.98 \times 10^{-5}\) | \(1.03 \times 10^{-5}\) | strong |

| config. B | \(7.31 \times 10^{-5}\) | \(1.03 \times 10^{-5}\) | strong |

| config. C | 0.000152 | \(2 \times 10^{-5}\) | strong |

| config. D | \(9 \times 10^{-5}\) | 0.000415 | strong |

| config. E | 0.000157 | 0.000248 | strong |

Intensity at volume fraction¶

GBPN

The intensity at volume fraction \(I_x\) is the minimum discretised intensity \(i\) present in at most \(x\%\) of the volume. [ElNaqa2009] defined both \(I_{10}\) and \(I_{90}\) as features. In the context of this work, these two features are defined as \(F_{\mathit{ivh.I10}}\) and \(F_{\mathit{ivh.I90}}\), respectively.

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 5 | — | very strong |

| config. A | 96 | — | strong |

| config. B | 92 | — | strong |

| config. C | 88.8 | 0.2 | moderate |

| config. D | 87 | 0.1 | strong |

| config. E | 770 | 5 | moderate |

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 2 | — | very strong |

| config. A | \(-\)128 | 8 | strong |

| config. B | \(-\)135 | 8 | strong |

| config. C | \(-\)421 | 14 | strong |

| config. D | \(-\)303 | 20 | strong |

| config. E | 399 | 17 | moderate |

Volume fraction difference between intensity fractions¶

DDTU

This feature is the difference between the volume fractions at two different intensity fractions, e.g. \(V_{10}-V_{90}\) [ElNaqa2009]. In the context of this work, this feature is defined as \(F_{\mathit{ivh.V10minusV90}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 0.23 | — | very strong |

| config. A | 0.978 | 0.001 | strong |

| config. B | 0.977 | 0.001 | strong |

| config. C | 0.997 | 0.001 | strong |

| config. D | 0.971 | 0.001 | strong |

| config. E | 0.974 | 0.001 | strong |

Intensity fraction difference between volume fractions¶

CNV2

This feature is the difference between discretised intensities at two different fractional volumes, e.g. \(I_{10} - I_{90}\) [ElNaqa2009]. In the context of this work, this feature is defined as \(F_{\mathit{ivh.I10minusI90}}\).

| data | value | tol. | consensus |

|---|---|---|---|

| dig. phantom | 3 | — | very strong |

| config. A | 224 | 8 | strong |

| config. B | 227 | 8 | strong |

| config. C | 510 | 14 | strong |

| config. D | 390 | 20 | strong |

| config. E | 371 | 13 | moderate |

Area under the IVH curve¶

9CMM

Note: This feature currently has no reference values and should not be used.

The area under the IVH curve \(F_{\mathit{ivh.auc}}\) was defined by [VanVelden2011]. The area under the IVH curve can be approximated by calculating the Riemann sum using the trapezoidal rule. Note that if there is only one discretised intensity in the ROI, we define the area under the IVH curve \(F_{\mathit{ivh.auc}}=0\).

Grey level co-occurrence based features¶

LFYI

In image analysis, texture is one of the defining sets of features. Texture features were originally designed to assess surface texture in 2D images. Texture analysis is however not restricted to 2D slices and can be extended to 3D objects. Image intensities are generally discretised before calculation of texture features (see Intensity discretisation).

The grey level co-occurrence matrix (GLCM) is a matrix that expresses how combinations of discretised intensities (grey levels) of neighbouring pixels, or voxels in a 3D volume, are distributed along one of the image directions. Generally, the neighbourhood for GLCM is a 26-connected neighbourhood in 3D and a 8-connected neighbourhood in 2D. Thus, in 3D there are 13 unique direction vectors within the neighbourhood for Chebyshev distance \(\delta=1\), i.e. \((0,0,1)\), \((0,1,0)\), \((1,0,0)\), \((0,1,1)\), \((0,1,-1)\), \((1,0,1)\), \((1,0,-1)\), \((1,1,0)\), \((1,-1,0)\), \((1,1,1)\), \((1,1,-1)\), \((1,-1,1)\) and \((1,-1,-1)\), whereas in 2D the direction vectors are \((1,0,0)\), \((1,1,0)\), \((0,1,0)\) and \((-1,1,0)\).

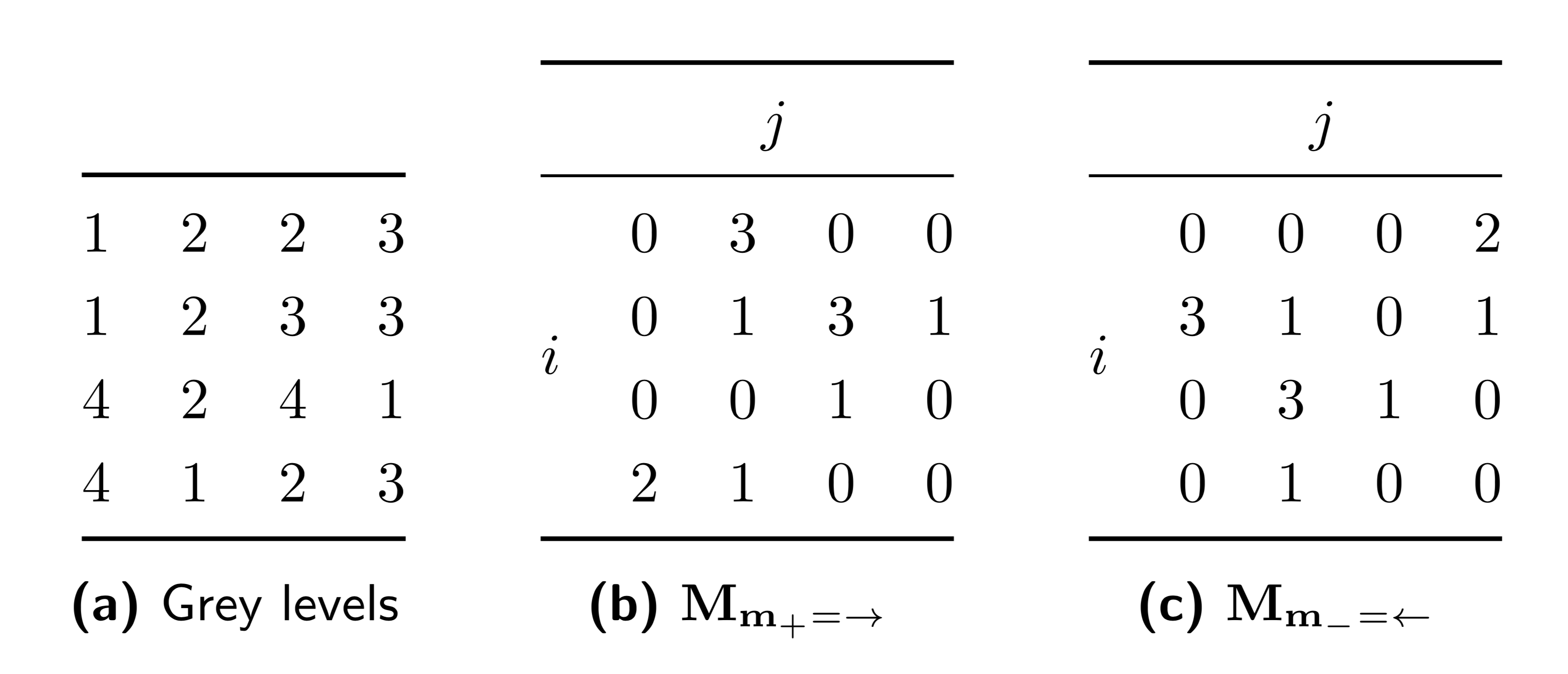

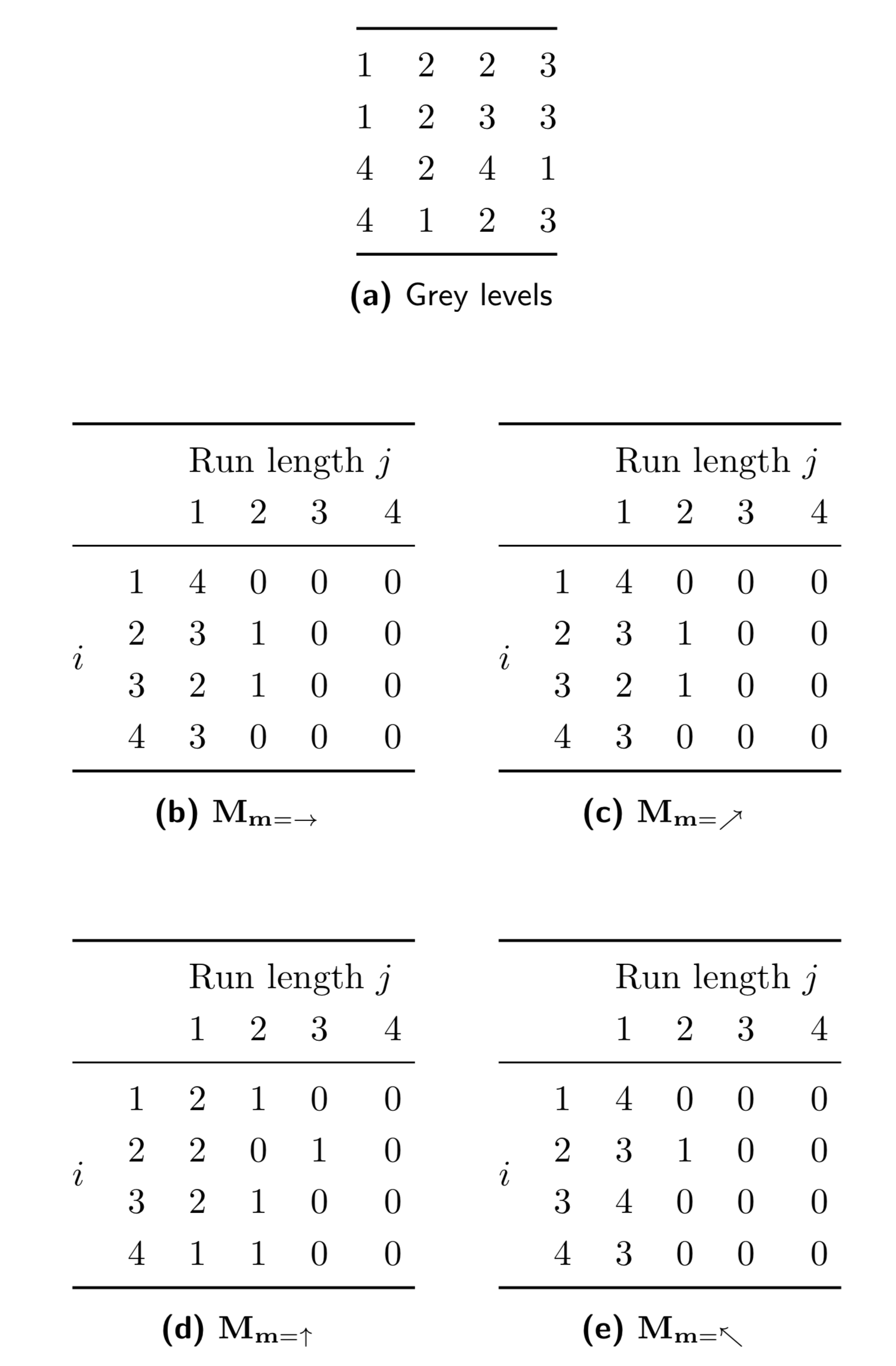

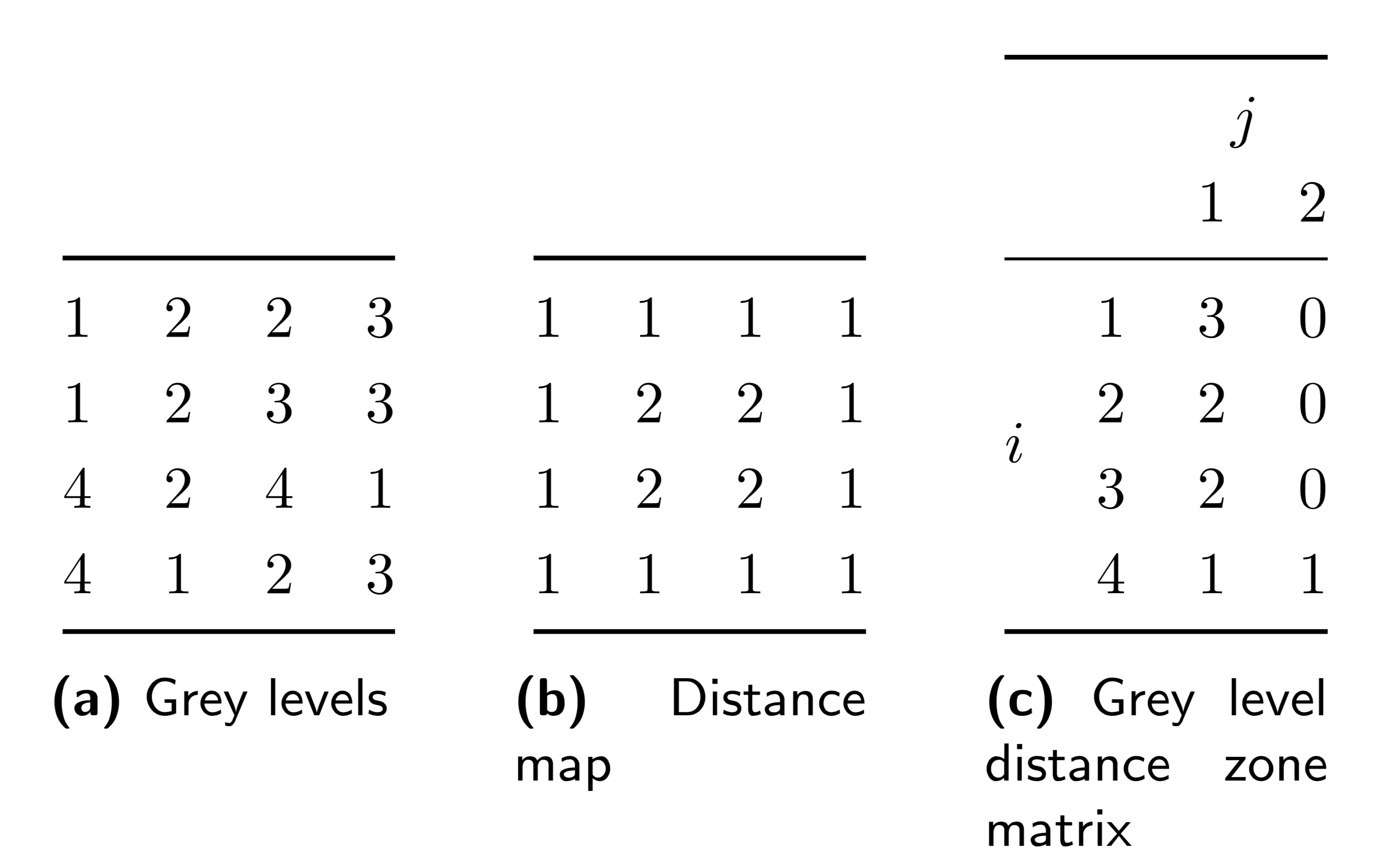

A GLCM is calculated for each direction vector, as follows. Let \(\mathbf{M}_{\mathbf{m}}\) be the \(N_g \times N_g\) grey level co-occurrence matrix, with \(N_g\) the number of discretised grey levels present in the ROI intensity mask, and \(\mathbf{m}\) the particular direction vector. Element \((i,j)\) of the GLCM contains the frequency at which combinations of discretised grey levels \(i\) and \(j\) occur in neighbouring voxels along direction \(\mathbf{m}_{+}=\mathbf{m}\) and along direction \(\mathbf{m}_{-}= -\mathbf{m}\). Then, \(\mathbf{M}_{\mathbf{m}} = \mathbf{M}_{\mathbf{m}_{+}} + \mathbf{M}_{\mathbf{m}_{-}} = \mathbf{M}_{\mathbf{m}_{+}} + \mathbf{M}_{\mathbf{m}_{+}}^T\) [Haralick1973]. As a consequence the GLCM matrix \(\mathbf{M}_{\mathbf{m}}\) is symmetric. An example of the calculation of a GLCM is shown in Fig. 10. Corresponding grey level co-occurrence matrices for each direction are shown in Fig. 11.

Fig. 10 Grey levels (a) and corresponding grey level co-occurrence matrices for the 0◦ (b) and 180◦ directions (c). In vector notation these directions are \(\mathbf{m_{+}} = (1, 0)\) and \(\mathbf{m_{-}}\) = (−1, 0). To calculate the symmetrical co-occurrence matrix \(\mathbf{M}_{\mathbf{m}}\) both matrices are summed by element.

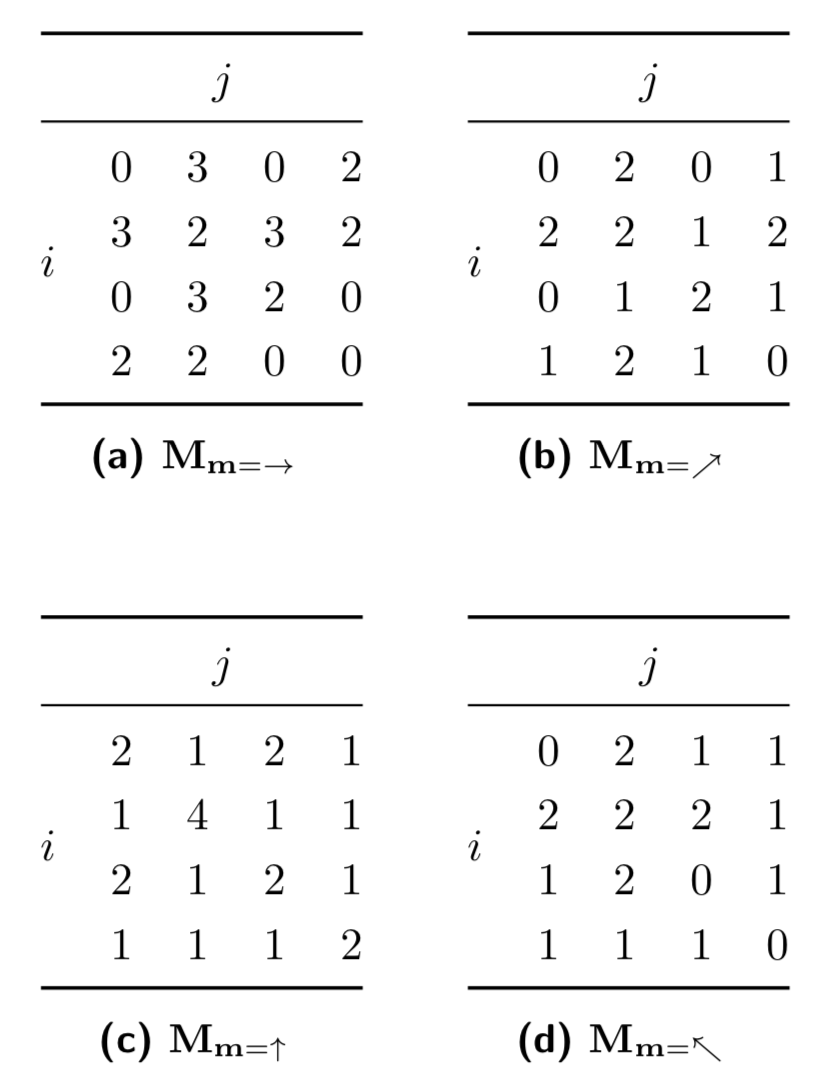

Fig. 11 Grey level co-occurrence matrices for the 0◦ (a), 45◦ (b), 90◦ (c) and 135◦ (d) directions. In vector notation these directions are \(\mathbf{m} = (1, 0)\), \(\mathbf{m} = (1, 1)\), \(\mathbf{m} = (0, 1)\) and \(\mathbf{m} = (−1, 1)\), respectively.

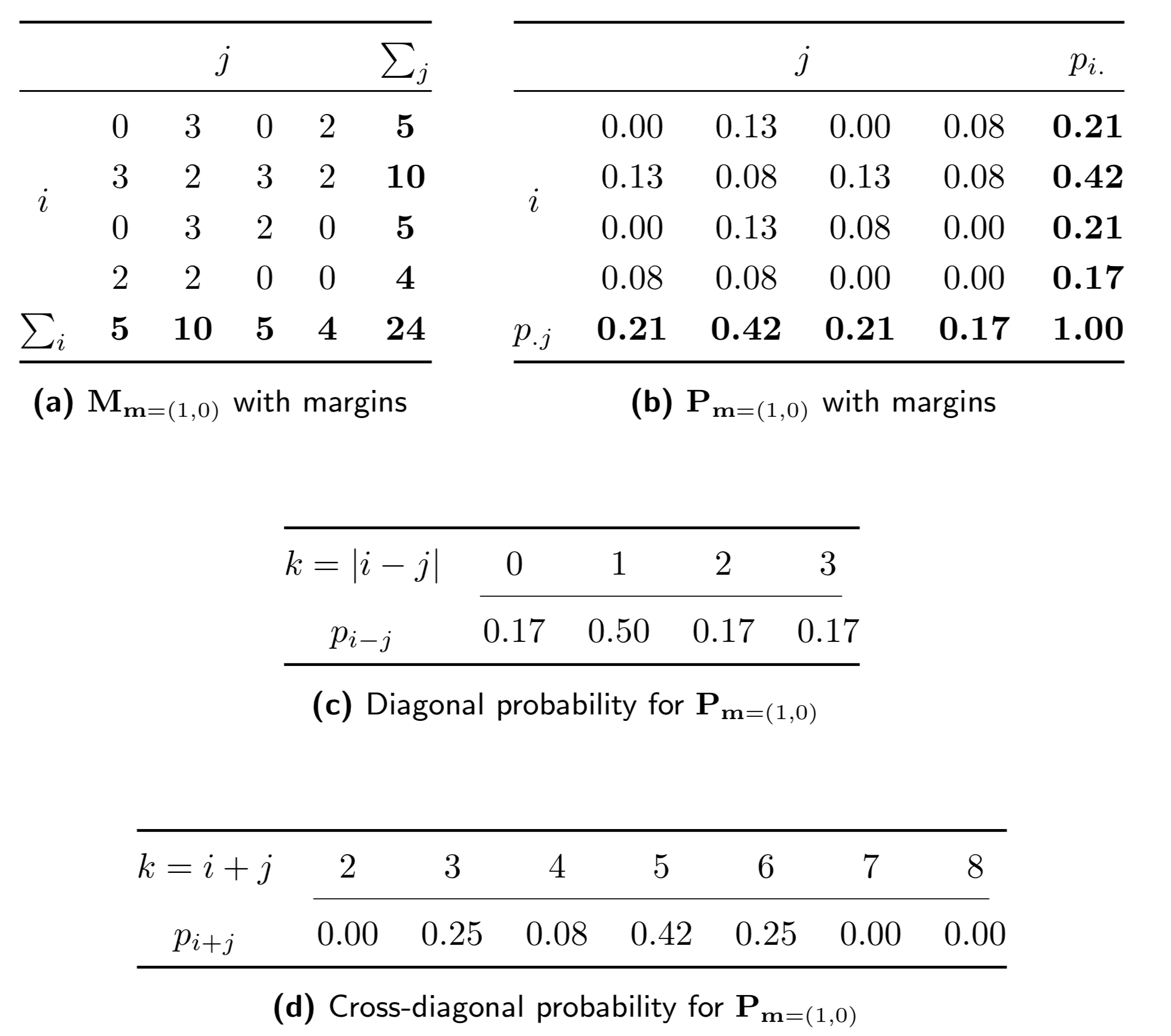

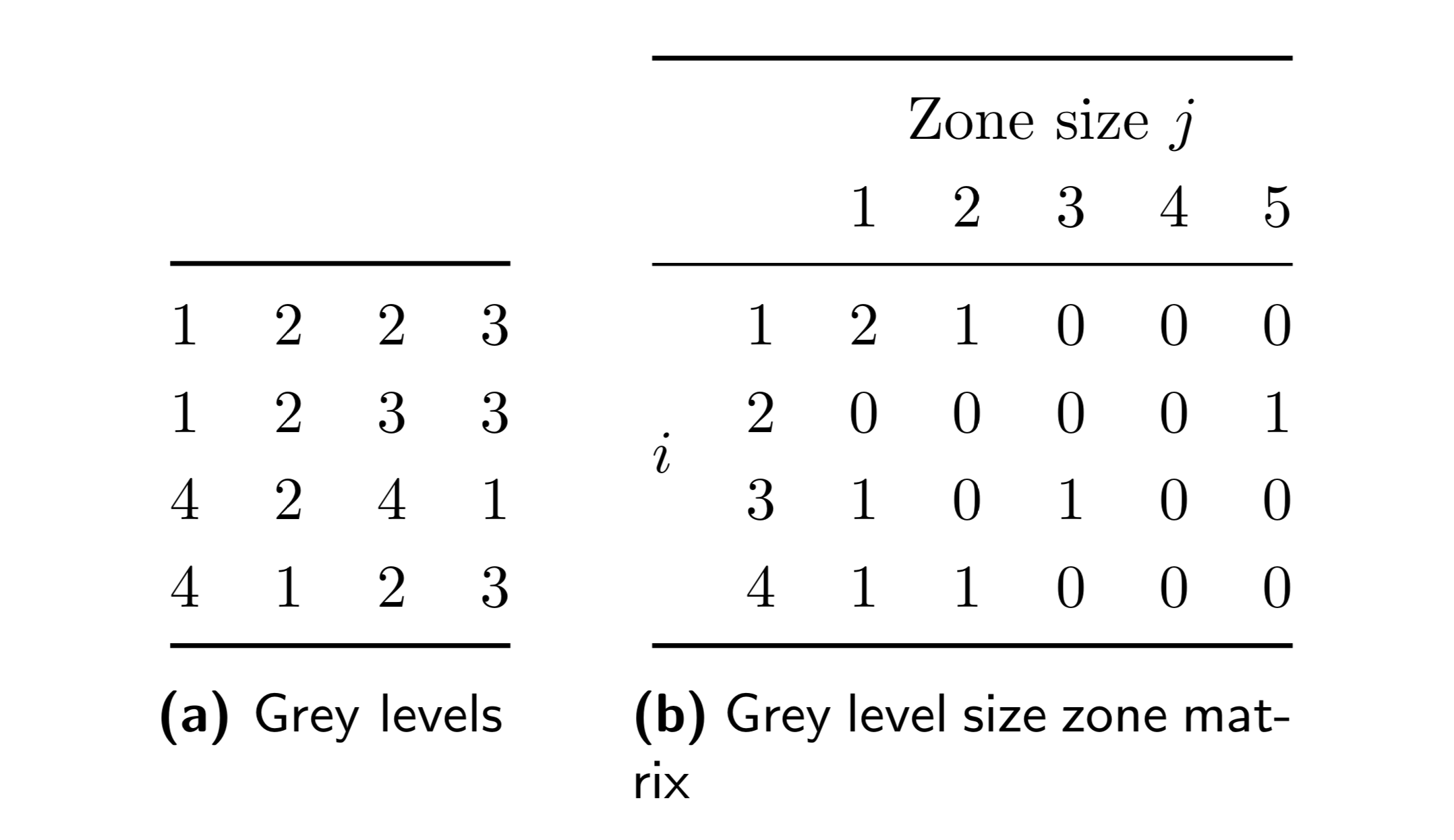

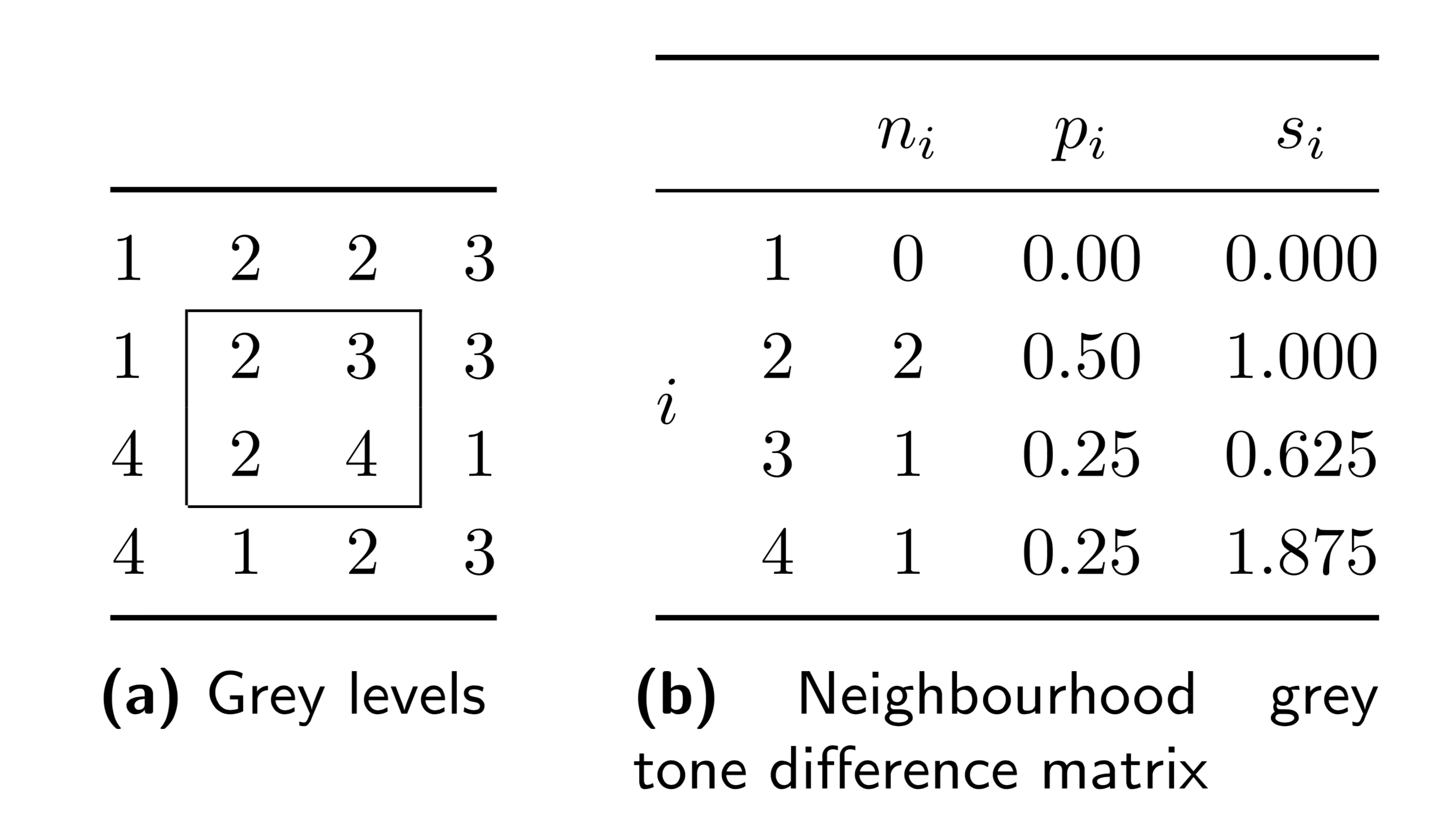

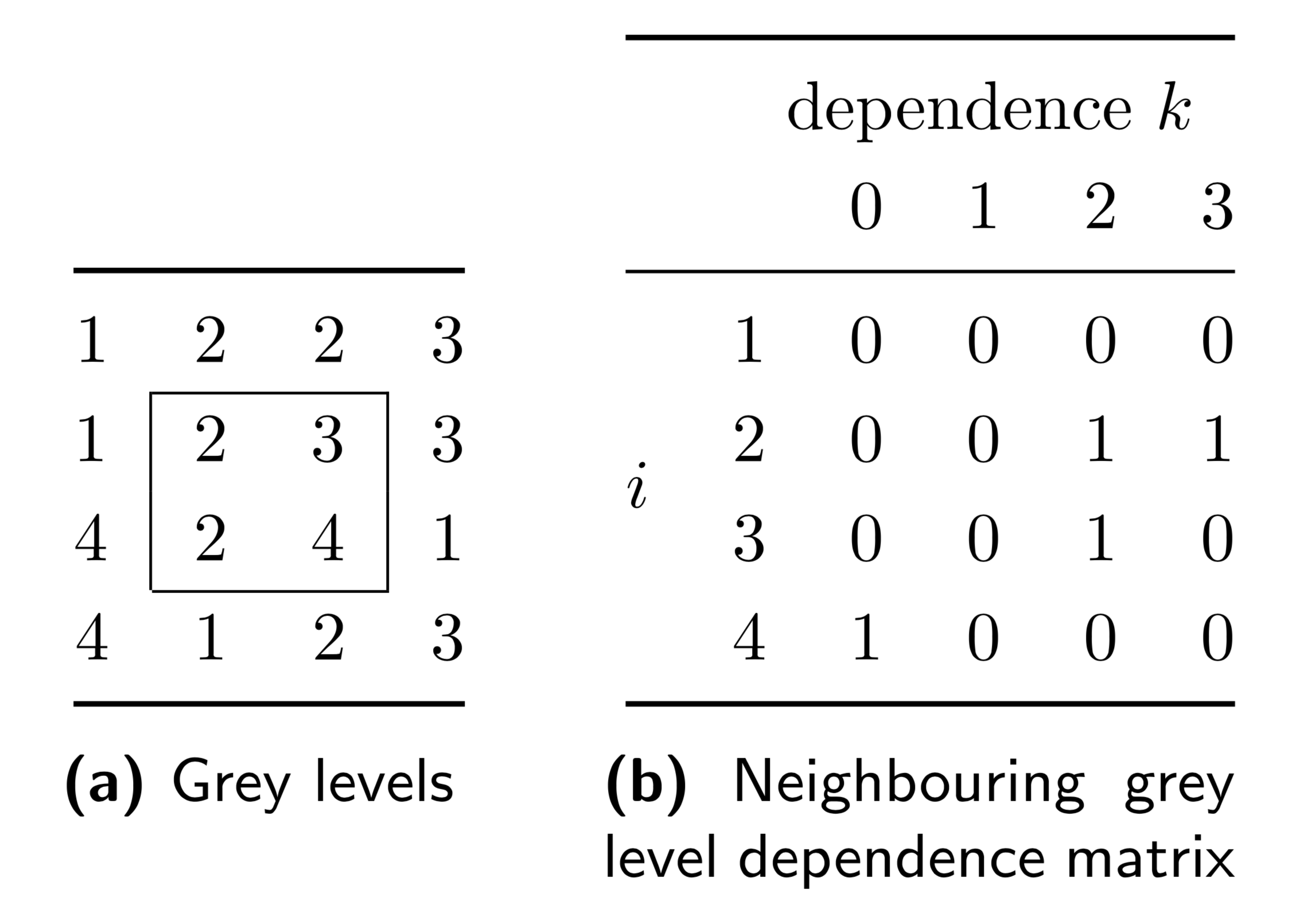

GLCM features rely on the probability distribution for the elements of the GLCM. Let us consider \(\mathbf{M}_{\mathbf{m}=(1,0)}\) from the example, as shown in Fig. 12. We derive a probability distribution for grey level co-occurrences, \(\mathbf{P}_{\mathbf{m}}\), by normalising \(\mathbf{M}_{\mathbf{m}}\) by the sum of its elements. Each element \(p_{ij}\) of \(\mathbf{P}_{\mathbf{m}}\) is then the joint probability of grey levels \(i\) and \(j\) occurring in neighbouring voxels along direction \(\mathbf{m}\). Then \(p_{i.} = \sum_{j=1}^{N_g} p_{ij}\) is the row marginal probability, and \(p_{.j}=\sum_{i=1}^{N_g} p_{ij}\) is the column marginal probability. As \(\mathbf{P}_{\mathbf{m}}\) is by definition symmetric, \(p_{i.} = p_{.j}\). Furthermore, let us consider diagonal and cross-diagonal probabilities \(p_{i-j}\) and \(p_{i+j}\) [Haralick1973][Unser1986]:

Here, \(\left[\ldots\right]\) is an Iverson bracket, which equals \(1\) when the condition within the brackets is true and \(0\) otherwise. In effect we select only combinations of elements \((i,j)\) for which the condition holds.